
65 Cards in this Set

6.1 What are the requirements for the transmission?

- Quality as high as possible

6.1 What is the idea underlying speech and audio coding?

- Compression of the signal

6.1 What are the classes of speech coding?

1. Waveform coding: bit rate ~64 kbit/s
2. Parametric coding
3. Hybrid coding

|

|

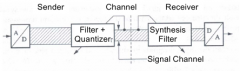

6.1 What is the principle of waveform coding? |

Reduces the information already in the sender by quantization. |

|

|

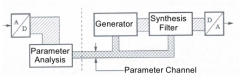

6.1 What is the principle of parameter coding? |

Parameters are transmitted, from which the speech signal may be synthesized on the receiving side. |

6.1 Which type of coding tries to reproduce the speech signal?

Waveform coding

6.1 What are the advantages and disadvantages of discrete signal representation?

Advantages:

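6.1 What is aliasing? And how do we deal with it?

Aliasing is an effect that causes different signals to become indistinguishable (aliases of one another) when sampled. It is avoided by band-limiting the signal with an anti-aliasing low-pass filter before sampling, so that it contains no components above half the sampling frequency.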
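A small numeric illustration of the effect, assuming a sampling rate of 8 kHz (an arbitrary choice for the example): a 1 kHz cosine and a 7 kHz cosine yield identical samples, because 7 kHz lies above half the sampling frequency and folds back to 8 − 7 = 1 kHz.

```python
import math


def sample_cosine(freq_hz: float, fs_hz: float, n_samples: int) -> list[float]:
    """Sample cos(2*pi*f*t) at the instants t = n / fs, n = 0 .. n_samples - 1."""
    return [math.cos(2 * math.pi * freq_hz * n / fs_hz) for n in range(n_samples)]


FS = 8000.0                           # assumed sampling rate in Hz
low = sample_cosine(1000.0, FS, 16)   # below fs/2: represented correctly
high = sample_cosine(7000.0, FS, 16)  # above fs/2: folds back to 8000 - 7000 = 1000 Hz

# Both sequences agree up to floating-point rounding: after sampling,
# the 7 kHz tone is an alias of the 1 kHz tone.
max_diff = max(abs(a - b) for a, b in zip(low, high))
print(f"largest difference between the two sample sequences: {max_diff:.1e}")
```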

6.2 What is Pulse Code Modulation (PCM)?

Pulse Code Modulation uses linear quantization: the amplitude values are collected within equidistant intervals. With data words of word length w, this yields a range of 2^w possible values.
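A minimal sketch of linear PCM, assuming amplitudes in the symmetric range [−x_max, x_max], a quantizer with 2^w equidistant intervals and reconstruction at the interval centre; `pcm_encode` and `pcm_decode` are illustrative names. Amplitudes outside the range are forced into the outermost interval, which is the clipping effect.

```python
def pcm_encode(x: float, w: int, x_max: float = 1.0) -> int:
    """Map an amplitude to one of 2**w equidistant intervals (index 0 .. 2**w - 1)."""
    levels = 2 ** w
    q = 2 * x_max / levels                 # width of one interval
    index = int((x + x_max) // q)          # interval that contains x
    return min(max(index, 0), levels - 1)  # amplitudes outside the range are clipped


def pcm_decode(index: int, w: int, x_max: float = 1.0) -> float:
    """Reconstruct the amplitude as the centre of the interval."""
    q = 2 * x_max / 2 ** w
    return -x_max + (index + 0.5) * q


codes = [pcm_encode(v, w=3) for v in (-1.0, 0.3, 1.0, 5.0)]
print(codes)                               # [0, 5, 7, 7]
print([pcm_decode(c, w=3) for c in codes])
```

With w = 3 there are 8 intervals of width 0.25; the input 5.0 lies far outside the range and ends up in the same code as 1.0.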

6.2 What is the clipping effect about?

Clipping is a form of distortion that limits a signal once it exceeds a threshold, e.g. the largest amplitude the quantizer can represent.

|

|

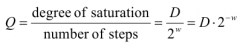

6.2 How do we calculate quantization steps in PCM? What is the maximum error we can get? |

Quantization steps Q accrue from the maximal dynamic range D (this is the range within which analog signal amplitudes may take on values) and the number of steps 2^w |
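A numeric check of these relations, assuming D = 2 (amplitudes from −1 to 1) and w = 8 bits as example values: Q = 2/256 = 0.0078125, and reconstructing every amplitude at the centre of its step keeps the error within Q/2.

```python
def quantization_step(dynamic_range: float, w: int) -> float:
    """Q = D / 2**w for a linear quantizer with word length w."""
    return dynamic_range / 2 ** w


def quantize_to_center(x: float, q: float, x_min: float) -> float:
    """Replace x by the centre of the step of width q that contains it."""
    k = int((x - x_min) // q)
    return x_min + (k + 0.5) * q


D, W = 2.0, 8
Q = quantization_step(D, W)
# Largest error observed over a fine grid of amplitudes inside [-1, 1)
grid = [-1.0 + i * 1e-4 for i in range(20000)]
max_err = max(abs(quantize_to_center(x, Q, -1.0) - x) for x in grid)
print(Q, max_err <= Q / 2 + 1e-12)     # 0.0078125 True
```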

|

|

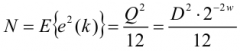

6.2 What is quantizing noise? How do we calculate it? |

Power of the error signal e(k) (quantizing noise) with a signal of equal distribution x(k). |
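Assuming the error e(k) is uniformly distributed over one step [−Q/2, Q/2] (which holds for a uniformly distributed signal x(k) and a fine quantizer), its power is the standard result σ_e² = Q²/12. The Monte Carlo sketch below compares the measured error power with Q²/12; the signal range [−1, 1), w = 6 and the seed are arbitrary choices.

```python
import random


def quantize(x: float, q: float, x_min: float = -1.0) -> float:
    """Linear quantizer: centre of the step of width q that contains x."""
    return x_min + (int((x - x_min) // q) + 0.5) * q


random.seed(1)                       # fixed seed for a reproducible run
w = 6
q = 2.0 / 2 ** w                     # Q = D / 2**w with D = 2 for the range [-1, 1)
samples = [random.uniform(-1.0, 1.0) for _ in range(200_000)]
errors = [quantize(x, q) - x for x in samples]

measured = sum(e * e for e in errors) / len(errors)  # power of e(k)
theory = q * q / 12
print(f"measured {measured:.3e}, Q^2/12 = {theory:.3e}")
```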

6.2 What is SNR? What is important to note from the formula?

SNR (signal-to-noise ratio) with linear quantization:
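Assuming the common form of this result, for a w-bit linear quantizer over [−x_max, x_max] with Q = 2·x_max/2^w and noise power Q²/12, the SNR of a signal with standard deviation σ_x (and no clipping) is SNR = 10·log10(σ_x²/σ_e²) ≈ 6.02·w + 4.77 − 20·log10(x_max/σ_x) dB. Two points follow: each additional bit adds about 6 dB, and the SNR falls as the signal uses less of the quantizer range, i.e. it depends on the degree of saturation. The helper below (a hypothetical name) evaluates the expression:

```python
import math


def snr_linear_db(w: int, x_max: float, sigma_x: float) -> float:
    """SNR in dB of a linear w-bit quantizer over [-x_max, x_max] (no clipping).

    Uses Q = 2 * x_max / 2**w and a noise power of Q**2 / 12.
    """
    q = 2 * x_max / 2 ** w
    noise_power = q * q / 12
    return 10 * math.log10(sigma_x ** 2 / noise_power)


# Each extra bit adds about 6 dB ...
print(round(snr_linear_db(9, 1.0, 0.25) - snr_linear_db(8, 1.0, 0.25), 2))   # 6.02
# ... while a 10x quieter signal (lower degree of saturation) loses 20 dB.
print(round(snr_linear_db(8, 1.0, 0.25) - snr_linear_db(8, 1.0, 0.025), 2))  # 20.0
```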

|

|

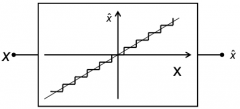

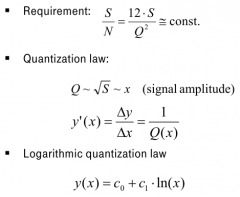

6.2 What is the aim of non-linear quantization? |

Removing the dependency on the degree of saturation |

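6.2 What is logarithmic companding and why do we need to use it?

A logarithmic quantization characteristic is difficult to implement directly in an analog-to-digital converter. Companding therefore compresses the signal with a logarithmic characteristic, quantizes it linearly and expands it again at the receiver (compressor + expander = compander).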
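A sketch of companding, assuming the continuous A-law characteristic with A = 87.6 (the parameter value of ITU-T G.711; the segmented approximation used in practice and the μ-law variant are not shown). It compares the worst relative error of compressor + 8-bit linear quantizer + expander with that of the linear quantizer alone, for quiet and loud amplitudes; the amplitude ranges are arbitrary.

```python
import math

A = 87.6                     # A-law parameter (value used in ITU-T G.711)
LOG_TERM = 1 + math.log(A)


def a_law_compress(x: float) -> float:
    """Continuous A-law compressor characteristic for x in [-1, 1]."""
    ax = abs(x)
    if ax < 1 / A:
        y = A * ax / LOG_TERM                  # linear segment near zero
    else:
        y = (1 + math.log(A * ax)) / LOG_TERM  # logarithmic segment
    return math.copysign(y, x)


def a_law_expand(y: float) -> float:
    """Expander at the receiver: inverse of a_law_compress."""
    ay = abs(y)
    if ay < 1 / LOG_TERM:
        x = ay * LOG_TERM / A
    else:
        x = math.exp(ay * LOG_TERM - 1) / A
    return math.copysign(x, y)


def uniform_quantize(y: float, w: int) -> float:
    """Linear w-bit quantizer on [-1, 1] (reconstruction at the step centre)."""
    q = 2 / 2 ** w
    k = min(int((y + 1) // q), 2 ** w - 1)
    return -1 + (k + 0.5) * q


def companded(x: float) -> float:
    """Compressor -> 8-bit linear quantizer -> expander."""
    return a_law_expand(uniform_quantize(a_law_compress(x), 8))


def linear(x: float) -> float:
    """8-bit linear quantizer without companding."""
    return uniform_quantize(x, 8)


def worst_relative_error(quantizer, amplitudes) -> float:
    return max(abs(quantizer(x) - x) / x for x in amplitudes)


quiet = [0.02 + i * 4e-5 for i in range(500)]  # amplitudes 0.02 .. 0.04
loud = [0.5 + i * 1e-3 for i in range(500)]    # amplitudes 0.5 .. 1.0
for name, quantizer in (("A-law", companded), ("linear", linear)):
    print(name, f"quiet {worst_relative_error(quantizer, quiet):.1%}",
          f"loud {worst_relative_error(quantizer, loud):.1%}")
```

With companding the worst relative error is roughly the same for quiet and loud amplitudes, whereas the linear quantizer is much worse for quiet ones.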

6.2 What is the problem with logarithmic quantization? How can we solve it?

6.2 What is the idea of optimal quantization?

Quantizing more frequent values (around zero) with more precision, i.e. with more steps, than rare values (with high amplitudes)

6.2 How do we calculate the quantization intervals in the optimal quantization method?

6.2 What is the peculiarity of the optimal quantization method?

• Representatives are "centers of gravity" of the intervals
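Optimal quantization in this sense is usually computed with the Lloyd-Max algorithm, which alternates two conditions: decision thresholds midway between neighbouring representatives, and representatives at the centre of gravity of their interval. A sketch under assumed conditions (Laplacian-distributed samples as a stand-in for speech amplitudes, 8 levels, a fixed iteration count):

```python
import bisect
import random


def lloyd_max(samples: list[float], levels: int, iterations: int = 100) -> list[float]:
    """Iteratively optimise the representatives of a scalar quantizer (Lloyd-Max)."""
    lo, hi = min(samples), max(samples)
    # start from a uniform quantizer spanning the sample range
    reps = [lo + (i + 0.5) * (hi - lo) / levels for i in range(levels)]
    for _ in range(iterations):
        # condition 1: thresholds lie midway between neighbouring representatives
        thresholds = [(a + b) / 2 for a, b in zip(reps, reps[1:])]
        sums = [0.0] * levels
        counts = [0] * levels
        for x in samples:
            i = bisect.bisect_right(thresholds, x)  # interval containing x
            sums[i] += x
            counts[i] += 1
        # condition 2: each representative is the centre of gravity of its interval
        reps = sorted(s / c if c else r for s, c, r in zip(sums, counts, reps))
    return reps


random.seed(0)
# Laplacian samples: many small amplitudes, few large ones (assumed speech-like)
data = [random.choice((-1, 1)) * random.expovariate(1.0) for _ in range(5000)]
reps = lloyd_max(data, levels=8)
steps = [b - a for a, b in zip(reps, reps[1:])]
print([round(r, 2) for r in reps])
print([round(s, 2) for s in steps])  # narrow steps near zero, wide steps far out
```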

6.2 What is the idea of adaptive quantization?

Adapting the step height of the quantizer to the momentary degree of saturation, which reduces the dependency on the degree of saturation dynamically

|

|

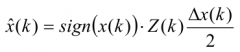

6.2 How do we calculate quantized values in adaptive quantization method? |

|

|

|

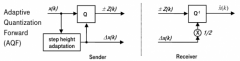

6.2 What is the principle of Adaptive Quantization Forward (AQF)? |

The step height ∆x(k) is calculated in blocks for signal sections of the length of N, and then retained for this section. |
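A sketch of forward adaptation, assuming the step height of each block is proportional to the block's RMS value, so that the quantizer spans ±c·RMS (c = 4, N = 32 and w = 4 are illustrative values; the function names are hypothetical). The list of step heights is what must reach the receiver alongside the codes.

```python
import math


def aqf_encode(x: list[float], n: int, w: int, c: float = 4.0):
    """Forward-adaptive quantization with one step height per block of n samples.

    Returns the integer codes and the step heights (side information).
    """
    levels = 2 ** w
    codes: list[int] = []
    steps: list[float] = []
    for start in range(0, len(x), n):
        block = x[start:start + n]
        rms = math.sqrt(sum(v * v for v in block) / len(block)) or 1e-9
        delta = 2 * c * rms / levels          # quantizer covers +/- c * rms
        steps.append(delta)
        for v in block:
            k = math.floor(v / delta)         # mid-rise interval index
            codes.append(max(-levels // 2, min(levels // 2 - 1, k)))
    return codes, steps


def aqf_decode(codes: list[int], steps: list[float], n: int) -> list[float]:
    """Receiver: reconstruct each value with the step height of its block."""
    return [(k + 0.5) * steps[i // n] for i, k in enumerate(codes)]


# A quiet section followed by a loud one
signal = ([0.01 * math.sin(0.3 * i) for i in range(64)]
          + [0.8 * math.sin(0.3 * i) for i in range(64)])
codes, steps = aqf_encode(signal, n=32, w=4)
rec = aqf_decode(codes, steps, n=32)
print([round(s, 4) for s in steps])  # small steps for quiet blocks, large for loud ones
```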

|

|

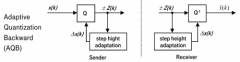

6.2 What is the principle of Adaptive Quantization Backward (AQB)? |

The necessity of transmitting the step height is no longer required, as this information may be retrieved from the transmitted signal Z(k) (as long as the transmission functions error-free). |
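A sketch of backward adaptation, assuming a recursive variance estimate σ̂²(k) = α·σ̂²(k−1) + (1−α)·x̂²(k−1) computed only from already quantized values x̂, with the step height proportional to σ̂ (α, w, c and the initial estimate are assumed values; this estimator is one common choice). Encoder and decoder run the identical update, so the decoder derives the step heights itself.

```python
import math

ALPHA = 0.9   # forgetting factor of the variance estimate (assumed)
W = 4         # word length in bits (assumed)
C = 4.0       # quantizer range = +/- C * estimated standard deviation (assumed)
VAR0 = 1e-4   # initial variance estimate, known to both sides (assumed)


def _step(var: float) -> float:
    """Step height derived from the current variance estimate."""
    return 2 * C * math.sqrt(var) / 2 ** W


def aqb_encode(x: list[float]) -> list[int]:
    var, codes = VAR0, []
    for v in x:
        delta = _step(var)
        k = max(-2 ** (W - 1), min(2 ** (W - 1) - 1, math.floor(v / delta)))
        codes.append(k)
        x_hat = (k + 0.5) * delta                      # value the decoder will see
        var = ALPHA * var + (1 - ALPHA) * x_hat ** 2   # update from quantized data only
    return codes


def aqb_decode(codes: list[int]) -> list[float]:
    var, out = VAR0, []
    for k in codes:
        x_hat = (k + 0.5) * _step(var)                 # same step height as the encoder
        out.append(x_hat)
        var = ALPHA * var + (1 - ALPHA) * x_hat ** 2
    return out


signal = [0.5 * math.sin(0.2 * i) for i in range(200)]
codes = aqb_encode(signal)
rec = aqb_decode(codes)
mse = sum((r - s) ** 2 for r, s in zip(rec[100:], signal[100:])) / 100
print(f"MSE after adaptation: {mse:.2e}")
```

Only the codes are transmitted; a single transmission error would, however, desynchronise the two variance estimates, which is why error-free transmission is assumed.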

6.2 How do we calculate the step height and the estimate of the variance in adaptive quantization?

6.2 Which type of quantization makes the SNR independent of the signal amplitude?

Logarithmic quantization

6.2 What is the idea of vector quantization?

6.2 Why do we use vector quantization?

- If the codebook is known, only the indices (addresses) of the code vectors need to be transmitted, not the full code vectors

6.2 What are the disadvantages of vector quantization?

• Computationally intensive, as a full comparison with all code vectors needs to be performed
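A minimal sketch of vector quantization with a full codebook search, assuming a small hand-made 2-D codebook shared by sender and receiver (real codecs train their codebooks, e.g. with the LBG algorithm). Pairs of consecutive samples form the vectors; only the index of the nearest code vector is transmitted, and the loop over every code vector is the full comparison that makes the method expensive.

```python
def squared_distance(a: tuple[float, ...], b: tuple[float, ...]) -> float:
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def vq_encode(vector: tuple[float, ...], codebook: list[tuple[float, ...]]) -> int:
    """Full search: compare the input with every code vector, return the best index."""
    return min(range(len(codebook)), key=lambda i: squared_distance(vector, codebook[i]))


def vq_decode(index: int, codebook: list[tuple[float, ...]]) -> tuple[float, ...]:
    """Receiver: the index addresses the code vector in its copy of the codebook."""
    return codebook[index]


CODEBOOK = [(0.0, 0.0), (1.0, 1.0), (-1.0, -1.0), (1.0, -1.0), (-1.0, 1.0)]
pairs = [(0.9, 1.2), (-0.1, 0.2), (0.8, -1.1)]  # consecutive samples grouped in pairs
indices = [vq_encode(p, CODEBOOK) for p in pairs]
print(indices)                                 # [1, 0, 3]
print([vq_decode(i, CODEBOOK) for i in indices])
```

With 5 code vectors, each index needs 3 bits, i.e. 1.5 bits per sample instead of a full sample value.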

6.3 What are the possibilities to reduce the bit rate?

• Redundancy reduction: everything that does not contain information is omitted from the transmission.

|

|

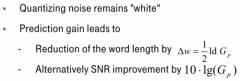

6.3 What difference PCM is about? (1 Approach) How we can improve it? |

Due to the correlation between single sampling values it is convenient not to transmit the sampling value itself but the difference to the preceding sampling value. By this calculation of difference a signal may be obtained, which modulates the quantizer less heavily, and which therefore may be transmitted using a minor word length (bit rate). |
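A sketch of DPCM with a first-order predictor, assuming the prediction is formed from the previous *reconstructed* value (closed loop, so encoder and decoder stay aligned despite quantization), a uniform quantizer for the difference, and illustrative values a = 0.9 and step = 0.05. The difference codes stay within a few levels, whereas quantizing the full-amplitude signal with the same step would need about ±20 levels.

```python
import math


def quantize_diff(d: float, step: float) -> int:
    """Uniform (mid-tread) quantizer for the prediction difference."""
    return round(d / step)


def dpcm_encode(x: list[float], a: float = 0.9, step: float = 0.05) -> list[int]:
    codes, x_rec_prev = [], 0.0
    for v in x:
        prediction = a * x_rec_prev
        k = quantize_diff(v - prediction, step)   # only the difference is coded
        codes.append(k)
        x_rec_prev = prediction + k * step        # same reconstruction as the decoder
    return codes


def dpcm_decode(codes: list[int], a: float = 0.9, step: float = 0.05) -> list[float]:
    out, x_rec_prev = [], 0.0
    for k in codes:
        x_rec_prev = a * x_rec_prev + k * step
        out.append(x_rec_prev)
    return out


signal = [math.sin(0.1 * n) for n in range(100)]
codes = dpcm_encode(signal)
rec = dpcm_decode(codes)
print(max(abs(c) for c in codes), max(abs(r - s) for r, s in zip(rec, signal)))
```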

|

|

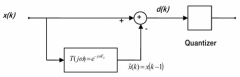

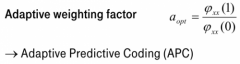

6.3 What is Adaptive Predictive Coding (APC)?

|

The weighting factor a may of course be also adjusted adaptively, either adaptively in blocks or sequentially.

In Adaptive Predictive Coding (APC) we choose the optimal coefficient (optimal, here, with respect to the mean square error) i.e. the relationship of the auto-correlation functions in shift from one sampling value to the auto-correlation of zero. |
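For a first-order predictor the optimum follows from setting the derivative of E[(x(k) − a·x(k−1))²] with respect to a to zero, which gives a_opt = φ_xx(1)/φ_xx(0). A sketch that estimates it for one block, assuming a biased short-time autocorrelation estimate and an arbitrary two-tone test block:

```python
import math


def autocorrelation(x: list[float], lag: int) -> float:
    """Biased short-time autocorrelation estimate phi_xx(lag) of one block."""
    return sum(x[k] * x[k - lag] for k in range(lag, len(x))) / len(x)


def optimal_first_order_coefficient(block: list[float]) -> float:
    """a_opt = phi_xx(1) / phi_xx(0), minimising the mean square prediction error."""
    r0 = autocorrelation(block, 0)
    return autocorrelation(block, 1) / r0 if r0 > 0 else 0.0


def prediction_error_power(block: list[float], a: float) -> float:
    """Mean square of e(k) = x(k) - a * x(k-1) over the block."""
    return sum((block[k] - a * block[k - 1]) ** 2 for k in range(1, len(block))) / len(block)


block = [math.sin(0.2 * n) + 0.3 * math.sin(1.3 * n) for n in range(160)]
a_opt = optimal_first_order_coefficient(block)
print(round(a_opt, 3))
# In APC this would be recomputed for every block (block-wise adaptation)
print(prediction_error_power(block, a_opt) < prediction_error_power(block, 0.0))
```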

|

|

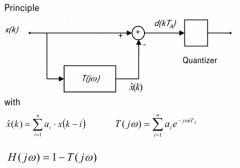

6.3 What is idea and principle of linear prediction? |

Transmitting a weighted difference between several preceding sample values. |
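With p preceding samples, the predictor x̂(k) = Σ a_i·x(k−i) (i = 1, ..., p) that minimises the mean square error satisfies the normal equations, which are commonly solved with the Levinson-Durbin recursion on the autocorrelation values. A plain sketch of that recursion, checked on a synthetic second-order autoregressive signal whose true coefficients (1.3 and −0.6) are chosen only for the example:

```python
import random


def autocorr(x: list[float], max_lag: int) -> list[float]:
    """Biased autocorrelation estimates r[0..max_lag] of one block."""
    n = len(x)
    return [sum(x[k] * x[k - lag] for k in range(lag, n)) / n for lag in range(max_lag + 1)]


def levinson_durbin(r: list[float], p: int) -> tuple[list[float], float]:
    """Solve the normal equations for the predictor coefficients a_1..a_p.

    Prediction: x_hat(k) = sum_i a_i * x(k - i). Returns (a, final error power).
    """
    a = [0.0] * (p + 1)            # a[0] is unused
    err = r[0]
    for i in range(1, p + 1):
        # reflection coefficient of stage i
        k = (r[i] - sum(a[j] * r[i - j] for j in range(1, i))) / err
        new_a = a[:]
        new_a[i] = k
        for j in range(1, i):
            new_a[j] = a[j] - k * a[i - j]
        a = new_a
        err *= 1 - k * k
    return a[1:], err


# Synthetic AR(2) signal x(k) = 1.3 x(k-1) - 0.6 x(k-2) + white noise
random.seed(3)
x = [0.0, 0.0]
for _ in range(20_000):
    x.append(1.3 * x[-1] - 0.6 * x[-2] + random.gauss(0.0, 1.0))
coeffs, err_power = levinson_durbin(autocorr(x, 2), p=2)
print([round(c, 2) for c in coeffs], round(err_power, 2))  # close to [1.3, -0.6] and 1.0
```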

|

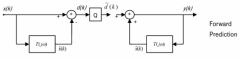

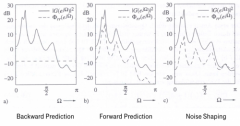

6.3 Forward prediction |

|

|

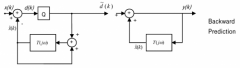

6.3 Backward prediction |

6.3 Which type of prediction provides a better SNR?

Backward prediction

6.3 Which type of prediction provides a more speech-like shaped prediction error?

Forward prediction

On the left side (backward prediction) we find white quantizing noise, which exceeds the signal in low-energy signal sections but is notably below the useful signal in the sections with high energy.

|

|

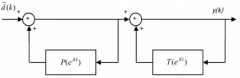

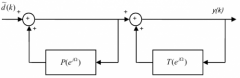

6.3 How do we do a noise-shaping? |

Between two extremes, the noise may be manipulated optimally with respect to its spectrum by a filter downstream to the forward predictor, so that |

6.3 What is Adaptive Differential Pulse Code Modulation (ADPCM) about?

Transmission of the adaptively weighted difference between several preceding sample values with

6.3 By how much can the necessary bit rate be reduced by ADPCM compared to log PCM?

To half (e.g. 32 kbit/s instead of 64 kbit/s)

6.4 What is the difference between parametric coding and waveform coding?

- Waveform codecs transmit the speech signal (or residuals thereof)

6.4 What is the idea of parametric coding?

• Model-based reproduction of the speech production process

6.4 Which information is contained in the parameters of parametric coders?

1. Vocal tract (a_i or formants)

|

|

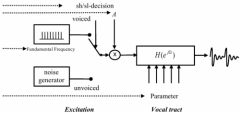

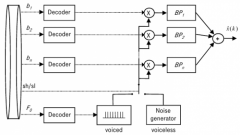

6.4 Describe the principle of the synthesis part of a vocoder? |

6.4 How is vocal tract information represented in a channel vocoder?

Bandpass filters

|

|

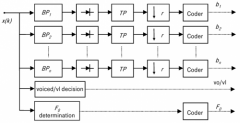

6.4 Describe the principle of the sending part of a channel vocoder? |

The sending part tries to build the vocal tract in terms of several parallel bandpass filters. |

6.4 Describe the principle of the receiving part of a channel vocoder.

6.4 What are the features of the formant vocoder?

• Similar to the channel vocoder, but representation of the vocal tract via

6.4 What are the disadvantages of the channel and formant vocoders?

Low necessary bit rate: 0.5 to 1.2 kbit/s

|

|

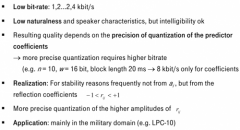

6.4 What are features of the Prediction vocoder? |

- Description of the vocal tract via linear prediction |

6.4 Describe the principle of the prediction vocoder.

The principle of linear prediction can also be used for a parametric description. Instead of the difference signal, which in an ideal prediction recreates the excitation signal of the vocal tract filter, this signal is now generated artificially, namely by a periodic pulse train (voiced sounds) or by white noise (unvoiced sounds).
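A sketch of the synthesis side, assuming the transmitted parameters are the predictor coefficients a_i, a voiced/unvoiced decision, the pitch period in samples and a gain (`synthesize_frame` and the parameter values are hypothetical): the excitation is a pulse train or white noise and drives the all-pole vocal tract filter 1/(1 − Σ a_i z^−i). For brevity the filter state is not carried over between frames.

```python
import random


def synthesize_frame(a: list[float], gain: float, voiced: bool,
                     pitch_period: int, n: int, seed: int = 0) -> list[float]:
    """Generate n samples from (hypothetical) transmitted vocoder parameters."""
    if voiced:
        # periodic pulse train with the transmitted pitch period
        excitation = [1.0 if k % pitch_period == 0 else 0.0 for k in range(n)]
    else:
        # white noise for unvoiced sounds
        rng = random.Random(seed)
        excitation = [rng.gauss(0.0, 1.0) for _ in range(n)]
    out: list[float] = []
    for k in range(n):
        # all-pole vocal tract filter: y(k) = gain*u(k) + sum_i a_i * y(k - i)
        y = gain * excitation[k]
        for i, ai in enumerate(a, start=1):
            if k - i >= 0:
                y += ai * out[k - i]
        out.append(y)
    return out


voiced_frame = synthesize_frame([1.3, -0.6], gain=0.5, voiced=True, pitch_period=80, n=160)
unvoiced_frame = synthesize_frame([1.3, -0.6], gain=0.5, voiced=False, pitch_period=80, n=160)
print([round(v, 3) for v in voiced_frame[:4]])
```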

6.4 Name characteristics of the prediction vocoder.

6.5 What is a coding gap? What are ideas for filling it?

The difference in bit rate between waveform coding and parametric coding.

6.5 Why do we use short-term and long-term prediction?

In addition to the short-term LPC analysis, most hybrid coders use a long-term predictor, which models the periodicity of the excitation signal in voiced segments.
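A sketch of a one-tap long-term predictor e(k) ≈ b·e(k−M), assuming the lag M is searched over an assumed pitch range by maximising num²/den (num = Σ e(k)·e(k−M), den = Σ e(k−M)²), a common criterion, with the least-squares gain b = num/den. Only positively correlated lags are considered; the test residual is an idealised pulse train with a period of 57 samples.

```python
def long_term_predictor(e: list[float], min_lag: int, max_lag: int) -> tuple[int, float]:
    """Choose the lag M and gain b of the one-tap predictor e(k) ~ b * e(k - M)."""
    best_lag, best_score, best_gain = min_lag, 0.0, 0.0
    for lag in range(min_lag, max_lag + 1):
        num = sum(e[k] * e[k - lag] for k in range(lag, len(e)))
        den = sum(e[k - lag] ** 2 for k in range(lag, len(e)))
        if num <= 0.0 or den == 0.0:
            continue                   # only positively correlated lags
        score = num * num / den        # error reduction (k >= lag) with the optimal gain
        if score > best_score:
            best_lag, best_score, best_gain = lag, score, num / den  # least-squares gain
    return best_lag, best_gain


# Idealised voiced residual: one pulse every 57 samples
residual = [1.0 if k % 57 == 0 else 0.0 for k in range(400)]
print(long_term_predictor(residual, min_lag=20, max_lag=147))  # (57, 1.0)
```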

6.5 Which kinds of quantization are used in hybrid coding?

• Scalar: forward and backward prediction, potentially with noise shaping

6.5 What is the idea of scalar quantization?

• In LPC-based coding, the excitation signal should show

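6.5 Which coding principle is used in the GSM full-rate codec?

Baseband RELP (the GSM 06.10 full-rate codec itself is called RPE-LTP: regular pulse excitation with long-term prediction)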

|

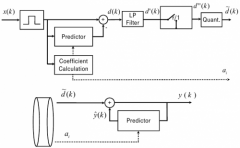

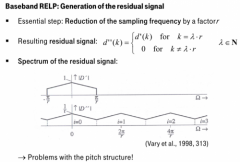

6.5 Describe the principle of baseband RELP (residual excitated linear prediction)

|

1. Windowing

2. Uses predicitor to calculate the difference 3. Transmit the simplify version of signal - lower band in frequency domain 4. Subsampling with reduction by the factor r 5. Quantization |

|

|

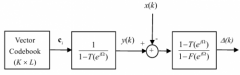

6.5 What is the basic principle of CELP (Code-Excited Linear Prediction) coding? |

Vector quantization |
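A sketch of the analysis-by-synthesis search at the heart of CELP, assuming a tiny random excitation codebook and a fixed second-order synthesis filter (both hypothetical); real CELP coders add a perceptual weighting filter and an adaptive (pitch) codebook, which are omitted here. Every code vector is filtered, scaled by its optimal gain and compared with the target, and the best index and gain are what would be transmitted.

```python
import random


def synthesis_filter(excitation: list[float], a: list[float]) -> list[float]:
    """All-pole LPC synthesis filter 1 / (1 - sum_i a_i z^-i) with zero initial state."""
    out: list[float] = []
    for k, u in enumerate(excitation):
        y = u + sum(ai * out[k - i] for i, ai in enumerate(a, start=1) if k - i >= 0)
        out.append(y)
    return out


def celp_search(target: list[float], codebook: list[list[float]], a: list[float]):
    """Return (index, gain) minimising ||target - gain * H(code vector)||^2."""
    best = (0, 0.0, float("inf"))
    for idx, code in enumerate(codebook):
        y = synthesis_filter(code, a)                          # synthesis of the candidate
        energy = sum(v * v for v in y)
        if energy == 0.0:
            continue
        gain = sum(t * v for t, v in zip(target, y)) / energy  # optimal gain
        error = sum((t - gain * v) ** 2 for t, v in zip(target, y))
        if error < best[2]:
            best = (idx, gain, error)
    return best[0], best[1]


rng = random.Random(7)
LPC = [1.3, -0.6]                                     # hypothetical LPC coefficients
CODEBOOK = [[rng.gauss(0.0, 1.0) for _ in range(40)] for _ in range(64)]
# A target built from code vector 23 with gain 0.8 should be found again
target = [0.8 * v for v in synthesis_filter(CODEBOOK[23], LPC)]
index, gain = celp_search(target, CODEBOOK, LPC)
print(index, round(gain, 3))
```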

|

|

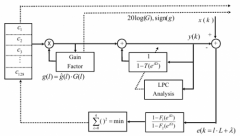

6.5 Explain the flow of CELP coding |

6.6 What are the steps of coding in the frequency domain?

1. Spectral analysis

6.6 How can we do spectral analysis for coding in the frequency domain?

- Via a filter bank

|

|

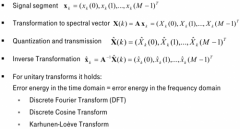

6.6 Explain the flow of Transform coding |

|

|

|

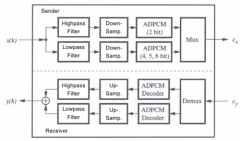

6.6 Explain the flow of Sub-Band coding |

6.7 Name criteria for codec selection.

1. Speech quality