
16 Cards in this Set

- Front

- Back

|

What is back propagation? |

When a connectionist model's output does not match the correct output, the error signal is relayed back through the previous connections, and the weights of those connections are adjusted so that future predictions are closer to the correct output. |
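A minimal sketch of this idea in Python, assuming a tiny network (2 inputs, 2 hidden units, 1 output) with sigmoid units that learns logical OR. The network size, learning rate, training data and function names are illustrative assumptions, not taken from any particular published model.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def forward(x, w_hidden, w_out):
    """Return hidden activations and the output for input x (a bias of 1.0 is appended)."""
    x_b = x + [1.0]
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x_b))) for row in w_hidden]
    h_b = hidden + [1.0]
    output = sigmoid(sum(w * hi for w, hi in zip(w_out, h_b)))
    return hidden, output

def total_error(data, w_hidden, w_out):
    """Sum of squared differences between target and predicted output."""
    return sum((t - forward(x, w_hidden, w_out)[1]) ** 2 for x, t in data)

def train(data, n_hidden=2, epochs=2000, lr=0.5, seed=0):
    rng = random.Random(seed)
    n_in = len(data[0][0])
    w_hidden = [[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)] for _ in range(n_hidden)]
    w_out = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden + 1)]
    initial = total_error(data, w_hidden, w_out)
    for _ in range(epochs):
        for x, target in data:
            hidden, output = forward(x, w_hidden, w_out)
            # Error signal at the output unit (scaled by the sigmoid's slope).
            delta_out = (target - output) * output * (1.0 - output)
            # Relay the error back to each hidden unit through its outgoing weight.
            delta_hidden = [delta_out * w_out[j] * hidden[j] * (1.0 - hidden[j])
                            for j in range(n_hidden)]
            # Change the weights in the direction that reduces the error.
            h_b = hidden + [1.0]
            for j in range(n_hidden + 1):
                w_out[j] += lr * delta_out * h_b[j]
            x_b = x + [1.0]
            for j in range(n_hidden):
                for i in range(n_in + 1):
                    w_hidden[j][i] += lr * delta_hidden[j] * x_b[i]
    return initial, total_error(data, w_hidden, w_out)

# Logical OR as (input, target) pairs; an assumed toy task.
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]
initial_error, final_error = train(data)
print(initial_error, final_error)
```

The printed error after training should be smaller than the error before training, which is the sense in which the weights "change in line with new predictions".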

|

|

What do the hidden representations in connectionist models do? |

They allow the model to re-represent the information present in the previous network layer to make the information more helpful in producing the correct output. |

|

|

What is meant by 'distributed representations'? |

The network's internal representations are distributed across units: each unit responds to many concepts, to differing degrees. E.g. one unit might fire a lot for birds, less so for mammals and less so for flowers. The 'fingerprint' of each concept is the unique combination of activation levels across all the units in the network when that concept is represented. |

|

|

How are semantic similarities represented in a distributed network? |

Semantically similar concepts produce similar patterns of activation: many units show the same level of activation for both concepts, and only a few discrepancies differentiate the two. |

|

|

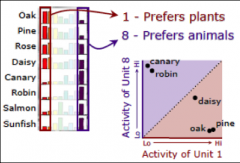

How can network activity be graphed to show semantic similarities? |

At the most basic level, comparisons can be made between just two units: by plotting the activity level of one against the other, various representations can be placed on these axes, and their spatial proximity on the graph gives an indication of their semantic similarity.

This method can be extrapolated into far more than just 2D graphical representations and so many more than two units can be used.
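As a rough illustration, proximity can be computed directly from activation patterns over any number of units. The sketch below uses made-up activation values for four hypothetical concepts across four units; the names `patterns`, `distance` and `closest_to` are assumptions for this example only.

```python
import math

# Hypothetical activation levels of four units for each concept (made-up numbers).
patterns = {
    "robin":  [0.9, 0.8, 0.1, 0.2],
    "canary": [0.8, 0.9, 0.2, 0.1],
    "oak":    [0.1, 0.2, 0.9, 0.8],
    "pine":   [0.2, 0.1, 0.8, 0.9],
}

def distance(a, b):
    """Euclidean distance between two activation patterns of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def closest_to(name):
    """Return the other concept whose activation pattern is nearest to `name`."""
    others = [other for other in patterns if other != name]
    return min(others, key=lambda other: distance(patterns[name], patterns[other]))

print(closest_to("robin"))
print(distance(patterns["robin"], patterns["canary"]))
print(distance(patterns["robin"], patterns["oak"]))
```

With two units this is the distance between points on a 2D graph; with more units the same formula applies, even though the space can no longer be drawn directly.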

|

|

|

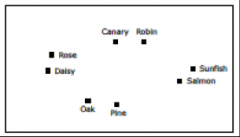

What is multi-dimensional scaling? |

A simple 2D reduction of complex multidimensional graphs showing the distance structure between concepts when plotting according to the activity levels of multiple units.

Location of an input = its activity pattern

Distance between inputs = similarity of their activity patterns (closer inputs have more similar patterns)
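One way to sketch this reduction is classical (metric) multi-dimensional scaling, written here in plain Python with power iteration to find the leading dimensions. This is an assumed, simplified variant for illustration, using made-up activation patterns over four units; it is not necessarily the procedure behind the figure above.

```python
import math
import random

def classical_mds(dist, dims=2, iters=200, seed=0):
    """Place n items in `dims` dimensions so that distances are preserved as well as possible."""
    n = len(dist)
    sq = [[dist[i][j] ** 2 for j in range(n)] for i in range(n)]
    row_mean = [sum(row) / n for row in sq]
    grand_mean = sum(row_mean) / n
    # Double-centre the squared distances to obtain an inner-product matrix.
    b = [[-0.5 * (sq[i][j] - row_mean[i] - row_mean[j] + grand_mean) for j in range(n)]
         for i in range(n)]
    rng = random.Random(seed)
    coords = [[0.0] * dims for _ in range(n)]
    for d in range(dims):
        # Power iteration: repeatedly multiply by b to find its leading eigenvector.
        v = [rng.uniform(-1.0, 1.0) for _ in range(n)]
        norm = math.sqrt(sum(x * x for x in v))
        v = [x / norm for x in v]
        for _ in range(iters):
            w = [sum(b[i][j] * v[j] for j in range(n)) for i in range(n)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm < 1e-12:
                break
            v = [x / norm for x in w]
        bv = [sum(b[i][j] * v[j] for j in range(n)) for i in range(n)]
        eigenvalue = sum(v[i] * bv[i] for i in range(n))
        scale = math.sqrt(max(eigenvalue, 0.0))
        for i in range(n):
            coords[i][d] = v[i] * scale
        # Remove this dimension so the next pass finds the next-largest one.
        for i in range(n):
            for j in range(n):
                b[i][j] -= eigenvalue * v[i] * v[j]
    return coords

def euclid(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

# Hypothetical activity patterns over four units (made-up numbers).
names = ["robin", "canary", "oak", "pine"]
activity = [[0.9, 0.8, 0.1, 0.2], [0.8, 0.9, 0.2, 0.1],
            [0.1, 0.2, 0.9, 0.8], [0.2, 0.1, 0.8, 0.9]]
dist = [[euclid(a, b) for b in activity] for a in activity]
coords = classical_mds(dist)
for name, point in zip(names, coords):
    print(name, [round(c, 3) for c in point])
```

In the resulting 2D layout, items with similar activity patterns should sit close together and items with dissimilar patterns far apart.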

|

|

|

What is meant by 'graded' representations? |

Similarities and differences in the semantics of different items can be represented as a matter of degree, rather than all-or-none. |

|

|

Describe how Rogers and McClelland (2004) demonstrated semantic hierarchies in error-driven learning using a computer programme. |

1. The model is given no starting information about the properties of the input items.

2. Error signals are produced by having subtly different internal representations between broad distinctions.

3. Once broad distinctions are learnt, learning focuses on the error signals that signal finer category distinctions.

4. Over time, representations differentiate gradually and progressively, from broad categories to finer ones. |

|

|

How did Rogers and McClelland simulate semantic dementia in their connectionist model of semantic knowledge?

Give a criticism of their method. |

They introduced 'noise' in the hidden layer by randomly perturbing some of the input signals and found that specific output was less accurate but general property knowledge was retained.

These random perturbations give the right effect but may not reflect the actual processes occurring in SD |
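The general pattern can be sketched with a toy example. The code below is not Rogers and McClelland's model or procedure: it adds Gaussian noise to fixed, made-up hidden-layer patterns (rather than to a trained network) and checks whether the nearest stored pattern still gives the right specific item and the right general category. All names and values are assumptions for illustration.

```python
import math
import random

# Hypothetical hidden-layer patterns: name -> (general category, activation pattern).
PATTERNS = {
    "robin":  ("bird", [0.9, 0.8, 0.1, 0.2]),
    "canary": ("bird", [0.8, 0.9, 0.2, 0.1]),
    "oak":    ("tree", [0.1, 0.2, 0.9, 0.8]),
    "pine":   ("tree", [0.2, 0.1, 0.8, 0.9]),
}

def nearest(pattern):
    """Name of the stored item whose pattern is closest to `pattern`."""
    return min(PATTERNS, key=lambda name: math.dist(pattern, PATTERNS[name][1]))

def lesion_accuracy(noise_sd, trials=500, seed=0):
    """Return (specific accuracy, general accuracy) after perturbing each pattern with noise."""
    rng = random.Random(seed)
    specific = general = total = 0
    for name, (category, pattern) in PATTERNS.items():
        for _ in range(trials):
            noisy = [a + rng.gauss(0.0, noise_sd) for a in pattern]
            guess = nearest(noisy)
            specific += guess == name                  # exact item recovered
            general += PATTERNS[guess][0] == category  # broad category recovered
            total += 1
    return specific / total, general / total

for sd in (0.0, 0.1, 0.3):
    specific_acc, general_acc = lesion_accuracy(sd)
    print(sd, specific_acc, general_acc)
```

Because items in the same category have similar patterns, noise tends to confuse an item with its close neighbours first, so specific accuracy falls before general accuracy does.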

|

|

How do connectionist models deal with exceptions and atypical group members? |

1. Initial predictions will yield huge error signals if the item is highly atypical of the group to which it belongs.

2. Over time, due to these error signals and back propagation, the network learns to represent the anomaly differently and shifts it away from the prototypical examples towards the outer regions of the subgroup. |

|

|

Need the ultimate prototype of a category actually exist? |

No, it is a reference point and a hodgepodge of typical features. |

|

|

How does item frequency affect categorisation? |

Parents tend to use basic-level terms more often than subordinate or superordinate terms, and connectionist models pick this up in terms of connection strength.

High frequency of exposure to a concept means it will be learned more quickly, making it robust to noise and damage (e.g. from SD later in life) |

|

|

What is meant by saying that connectionist representations are 'rich and flexible'? |

The representations are multi-dimensional, and the same network can represent rules as well as exceptions. |

|

|

What are the effects of 'coherent covariation'? |

1. Representations capture more than just individual shared properties.

2. Regular co-occurrence of sets of properties across different objects allows for distributed patterns of activity that reflect similarities and differences. |

|

|

What are the two main strengths of the PDP network model? |

1. Domain-general learning - extracts structure from the environment - can thus be used across many domains

2. Neurally-inspired modelling - allows us to think about how the representations of semantic knowledge might be implemented in neural networks |

|

|

What are the two main problems with the PDP network model? |

1. The model is oversimplified - it has built-in items (how would you have the concept of a dog without knowing anything about it?) - the model is explicitly told the right answer when it makes errors - it doesn't represent things like causality

2. Lacks explanation of how knowledge is actually used - how do we combine concepts? - how do we draw inferences? - how do we make analogies? |