22 Cards in this Set

- Front

- Back

|

Labels |

What the model is trying to predict (e.g., whether an email is spam, or whether a set of particles is a jet) |

|

|

Features |

Input variables used to predict the label, e.g., the words in an email |

|

|

Example |

A single piece of data. A labeled example has both features and a label; an unlabeled example has features only. |

|

|

Model |

The thing that does the predicting, i.e., maps features x to a predicted label y' |

|

|

Regression model vs. classification model |

Regression: predicts continuous values (e.g., a price). Classification: predicts discrete values (e.g., spam or not spam). |

|

|

Empirical risk minimization |

Training a model by finding the parameters that minimize the average loss over the training examples |
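As a rough illustration of what "loss over the training examples" means, the sketch below computes the mean squared error of a linear model on a tiny made-up dataset. The function name `mean_squared_error`, the linear form `weight * x + bias`, and the data are all assumptions for illustration; the card does not specify a loss function.

```python
def mean_squared_error(weight, bias, examples):
    """Average squared difference between predictions and labels."""
    total = sum((weight * x + bias - y) ** 2 for x, y in examples)
    return total / len(examples)


# Made-up labeled examples (feature, label) that follow y = 2x + 1.
examples = [(1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

risk_bad = mean_squared_error(1.0, 0.0, examples)   # poorly chosen parameters
risk_good = mean_squared_error(2.0, 1.0, examples)  # parameters that fit exactly
```

Training amounts to searching for the `weight` and `bias` that make this average as small as possible.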

|

|

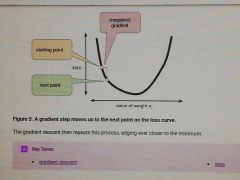

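Gradient descent algorithm |

Pick a random starting value for w1. Calculate the gradient of the loss-vs-w curve at that point. Move to a new point by stepping in the direction of the negative gradient, by a fraction of the gradient's magnitude (the learning rate). Repeat until the loss stops decreasing. |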
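The steps on this card can be sketched in a few lines. This is a minimal example, assuming a one-dimensional loss L(w) = (w - 3)^2; the loss, the starting range, the learning rate, and the step count are illustrative choices, not part of the original card.

```python
import random


def loss_gradient(w):
    """Gradient of the illustrative loss L(w) = (w - 3)**2."""
    return 2.0 * (w - 3.0)


def gradient_descent(learning_rate=0.1, steps=200, seed=0):
    rng = random.Random(seed)
    w = rng.uniform(-10.0, 10.0)  # random starting weight
    for _ in range(steps):
        # Step against the gradient, scaled by the learning rate.
        w = w - learning_rate * loss_gradient(w)
    return w


w_final = gradient_descent()  # ends up very close to the minimum at w = 3
```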

|

|

Learning rate and optimum learning rate |

Learning rate: the constant that multiplies the gradient to determine the next point. Optimum in 1D: 1/f''(x). Optimum in 2 or more dimensions: the inverse of the Hessian (the matrix of second partial derivatives). For general convex functions, the story is more complex. |
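To see why 1/f''(x) is the ideal one-dimensional learning rate, consider a quadratic, where a single step with that rate lands exactly on the minimum. The function f(x) = 2(x - 5)^2 + 1 and the starting point below are assumptions chosen for illustration.

```python
def f_prime(x):
    """First derivative of the illustrative function f(x) = 2*(x - 5)**2 + 1."""
    return 4.0 * (x - 5.0)


def f_second(x):
    """Second derivative of f; constant for a quadratic."""
    return 4.0


def one_step(x, learning_rate):
    """One gradient descent update: x_new = x - learning_rate * f'(x)."""
    return x - learning_rate * f_prime(x)


x0 = -2.0
optimal_rate = 1.0 / f_second(x0)  # 1 / f''(x)
x1 = one_step(x0, optimal_rate)    # reaches the minimum at x = 5 in one step
```

For non-quadratic functions the second derivative changes from point to point, so a single step generally does not reach the minimum.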

|

|

All module imports for creating a (basic) machine learning model with TensorFlow |

|

|

|

Stochastic gradient descent (SGD) vs. mini-batch stochastic gradient descent (mini-batch SGD) |

SGD: batch size of 1. Mini-batch SGD: batch of typically 10 to 1000 examples chosen at random. |
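The only difference between the two variants is the batch size, which the sketch below makes explicit. It assumes a one-weight model y' = w * x, a squared-error loss, and a small noiseless dataset; the function name `minibatch_sgd` and all hyperparameters are hypothetical. The batch of 4 is smaller than the typical 10 to 1000 only because the toy dataset has ten examples.

```python
import random


def minibatch_sgd(xs, ys, batch_size, learning_rate=0.005, steps=2000, seed=0):
    """Fit y' = w * x by minimizing squared error on random mini-batches."""
    rng = random.Random(seed)
    w = 0.0
    indices = list(range(len(xs)))
    for _ in range(steps):
        batch = rng.sample(indices, batch_size)  # examples chosen at random
        # Average gradient of (w*x - y)**2 over the batch.
        grad = sum(2.0 * (w * xs[i] - ys[i]) * xs[i] for i in batch) / batch_size
        w -= learning_rate * grad
    return w


xs = [float(i) for i in range(1, 11)]
ys = [2.0 * x for x in xs]  # noiseless data with true weight 2

w_sgd = minibatch_sgd(xs, ys, batch_size=1)   # SGD: one example per step
w_mini = minibatch_sgd(xs, ys, batch_size=4)  # mini-batch SGD
```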

|

|

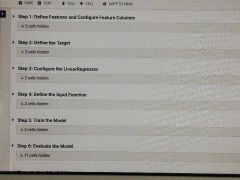

Building a model with TensorFlow, all steps |

|

|

|

Step 1: define features and configure feature columns |

|

|

|

Step 2: define the target |

|

|

|

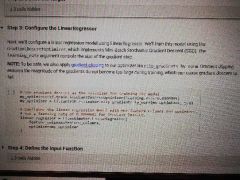

Step 3: configure linear regressor |

|

|

|

Step 4 |

|

|

|

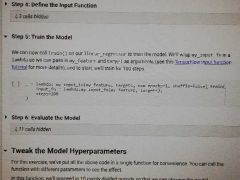

Input function |

|

|

|

Step 5 (the actual training function with TensorFlow) |

|

|

|

Step 6: evaluation and prediction |

|

|

|

One-hot encoding vs. multi-hot encoding |

One-hot: turn a string-valued feature into a vector in which each dimension represents one possible string. An extra out-of-vocabulary (OOV) dimension covers every string not accounted for, e.g., "hdkehijrla" for a road name. Each element is 1 or 0 (a boolean) indicating whether the feature equals that string, so exactly one element is 1. Multi-hot: like one-hot, but several dimensions can be 1. |
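A small sketch of both encodings, assuming a three-street vocabulary with one extra OOV slot at the end of the vector. The helper names `one_hot` and `multi_hot` and the street names are hypothetical.

```python
def one_hot(value, vocabulary):
    """Return a 0/1 vector with a single 1; the last slot is the OOV bucket."""
    vector = [0] * (len(vocabulary) + 1)
    index = vocabulary.index(value) if value in vocabulary else len(vocabulary)
    vector[index] = 1
    return vector


def multi_hot(values, vocabulary):
    """Return a 0/1 vector with a 1 for every value present."""
    vector = [0] * (len(vocabulary) + 1)
    for value in values:
        index = vocabulary.index(value) if value in vocabulary else len(vocabulary)
        vector[index] = 1
    return vector


streets = ["Main St", "Oak Ave", "Elm Rd"]  # hypothetical vocabulary

print(one_hot("Oak Ave", streets))                # [0, 1, 0, 0]
print(one_hot("hdkehijrla", streets))             # [0, 0, 0, 1] (OOV)
print(multi_hot(["Main St", "Elm Rd"], streets))  # [1, 0, 1, 0]
```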

|

|

Sparse representation |

Store only the non-zero values of one-hot or multi-hot vectors (so if the feature is a street name and there are a million streets, the stored vector doesn't need a million entries) |
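One way to picture a sparse representation is to keep just the positions of the non-zero entries together with the logical vector length. The dictionary layout and the helper names `to_sparse` and `to_dense` below are assumptions for illustration, not a specific library's format.

```python
def to_sparse(dense):
    """Keep only the positions of non-zero entries, plus the logical length."""
    return {"size": len(dense), "indices": [i for i, v in enumerate(dense) if v != 0]}


def to_dense(sparse):
    """Rebuild the full 0/1 vector from the sparse form."""
    dense = [0] * sparse["size"]
    for i in sparse["indices"]:
        dense[i] = 1
    return dense


# A multi-hot vector over a million possible streets, with two of them set.
dense = [0] * 1_000_000
dense[42] = 1
dense[977_000] = 1

sparse = to_sparse(dense)  # two stored indices instead of a million entries
```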

|

|

Z-score |

Scaling by the number of standard deviations from the mean: scaled value = (value - mean) / stdev. Usually falls between -3 and 3. |
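The formula on this card can be applied with the standard library alone. The sketch assumes the population standard deviation (`statistics.pstdev`); the card does not say whether population or sample standard deviation is intended, and the input values are made up.

```python
from statistics import mean, pstdev


def z_scores(values):
    """Scale each value to (value - mean) / stdev, using the population stdev."""
    mu = mean(values)
    sigma = pstdev(values)
    return [(v - mu) / sigma for v in values]


# Mean is 5 and population stdev is 2 for this made-up data.
scaled = z_scores([2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0])
# scaled is [-1.5, -0.5, -0.5, -0.5, 0.0, 0.0, 1.0, 2.0]
```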

|

|

Code to create buckets from latitude using pandas |
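The original answer for this card is not recoverable, so the following is only one plausible sketch using `pandas.cut`. The column name `latitude`, the sample values, and the two-degree bucket edges are all assumptions.

```python
import pandas as pd

# Hypothetical data; the column name and values are assumptions.
df = pd.DataFrame({"latitude": [32.5, 33.9, 34.2, 36.7, 37.8, 40.1]})

# Edges at 32, 34, ..., 42 give five two-degree buckets.
edges = [32, 34, 36, 38, 40, 42]

# right=False makes each bucket include its lower edge: [32, 34), [34, 36), ...
# labels=False returns the integer bucket index instead of an interval label.
df["latitude_bucket"] = pd.cut(df["latitude"], bins=edges, right=False, labels=False)
```

Each row now carries a bucket index from 0 to 4, which can be used as a categorical feature (for example, one-hot encoded) instead of the raw latitude.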

|