![]()

![]()

![]()

Use LEFT and RIGHT arrow keys to navigate between flashcards;

Use UP and DOWN arrow keys to flip the card;

H to show hint;

A reads text to speech;

113 Cards in this Set

- Front

- Back

|

Difference between sequence and list? |

Sequence is an abstraction of a list. It is not bound to a particular representation. It provides a first, rest, and a way to check if sequence is empty. |

|

|

Difference between list and sequence?

|

List is a concrete representation of a sequence of elements. Each node of the list has a data and a next pointer. A sequence is anything that can tell you what is the first and rest. |

|

|

What happens if you call next or rest on a vector?

|

You get a sequence of all but the first.

|

|

|

Why does clojure convert concrete representations to sequences? |

To make built in clojure functions more flexible. Count and map can be called on anything that can be converted to a sequence. That way you don't have to worry about representations. |

|

|

What does (rest [1 2 3 4]) return?

|

Sequence (2 3 4)

|

|

|

Difference between sequence and iterator? |

Both are abstractions and model data having a first and rest. |

|

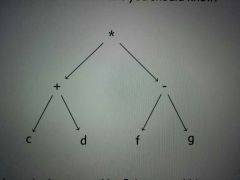

Tree traversal: pre-order. Can you reconstruct?

|

* + c d - f g

Can reconstruct if can distinguish leaves from nodes. |

|

Tree traversal: inorder. Can you reconstruct?

|

c + d * f - g

Cannot reconstruct without parenthesis |

|

Tree traversal: postorder. Can you reconstruct? |

c d + f g - *

Can reconstruct if can distinguish leaves from nodes. |

|

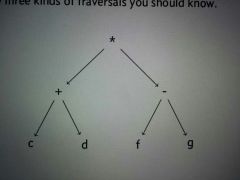

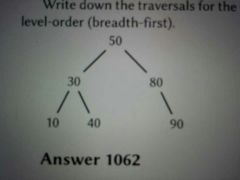

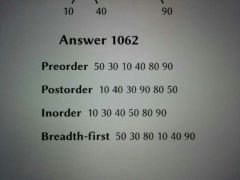

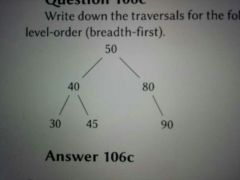

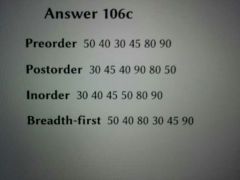

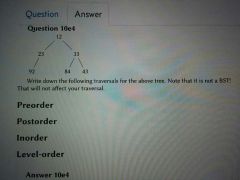

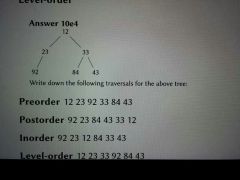

Pre-order, postorder, inorder, and breath first for following: |

|

|

|

Advantage and disadvantage of depth first search.

|

* takes little memory

* will not work for infinite trees or backdoor |

|

|

Advantage and disadvantage of breadth first search

|

*BFS will always return closest match to root

*will not be messed up by infinity *takes up more memory |

|

|

If given pre-order traversal of a tree, what do we need to know to generate the tree? |

Which nodes are leaves and how many children branches we have.

|

|

|

Inorder cannot generally be used to reconstruct a tree. Why?

|

Depth information is destroyed. Don't know which parts will be parenthesized.

|

|

Pre-order, inorder, postorder and breadth first |

|

|

|

|

|

|

What is the principle of locality?

|

If something is accessed, it will be accessed again in the near future or something close to it will be accessed in the near future.

|

|

|

What is temporal locality?

|

If something is accessed, it will likely be accessed in the near future.

Take advantage of it by copying it into a cache or moving it to a part of a data structure that has a faster access time. |

|

|

What is spatial locality?

|

When something is accessed, things near it will likely be accessed in the future.

Take advantage by when something is accessed, make things near it be more easy to access. |

|

|

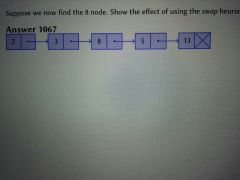

What is the move to front heuristic for ordering a list?

|

When an element is accessed, it should immediately be relocated to the head of the list.

|

|

|

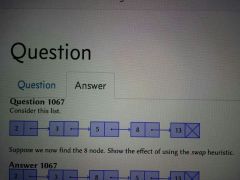

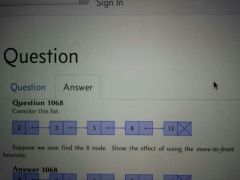

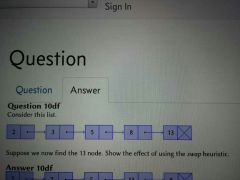

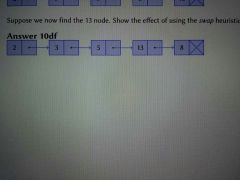

What is the swap heuristic for ordering a list?

|

Whenever an element is accessed, things to is moved one element closer to the front. |

|

|

|

|

|

|

|

|

|

|

|

Name tow algorithms that can provide useful data if interrupted

|

Selection sort, heap sort, bubble sort

|

|

|

Algorithms that work well if data given to them is already sorted.

|

Insertion sort, bubble sort

|

|

|

8 6 9 13 4 5 2

What will be the first 3 elements if you run selection sort on this array? Insertion sort? |

2 4 5 for selection |

|

|

4 1 9 3 8 7 5

First 3 elements picked for insertion? Selection? |

4 1 9

1 3 4 |

|

|

Special ability of selection sort?

|

Can be interrupted before completion and still have a partially sorted array |

|

|

Special ability of insertion sort?

|

Runs o(n) if data is already sorted.

Linear if data is sorted. |

|

|

Special ability of bubble sort

|

Runs linear if data is sorted.

|

|

|

Average time complexity of selection sort?

|

O(n^2) |

|

|

Average time complexity of insertion sort?

|

O(n^2)

|

|

|

Average time complexity of insertion sort?

|

O(n^2)

|

|

|

6 1 9 11 7 3 4

First 3 selection sort and insertion sort |

1 3 4

6 1 9 |

|

|

How does selection sort work? Specialty?

|

Pick smallest in whole array, put in place. Pick second smallest in whole array, put in place. That's why it's special is it keeps data sorted even if interrupted.

|

|

|

Insertion sort: how does it work? Special?

|

From First element, find smallest, put in place. From first 2 elements, find smallest and put in place. Repeat. Special is it runs linear if data is sorted.

|

|

|

Quicksort

|

Low pointer looks for higher than pivot

High pointer looks for lower than pivot |

|

|

Which algorithm suffers in performance if the data is already sorted? How to fix?

|

Quicksort. Fix with random pivot.

|

|

|

Quicksort

53 42 69 31 77 33 94 |

31 42 33 53 77 69 94

|

|

|

Quicksort

4 2 7 9 5 1 3 |

1 2 3 4 5 9 7

|

|

|

Normal time complexity of quicksort?

|

O(n logn)

|

|

|

How to avoid worst case of quicksort?

|

Random pointer

|

|

|

Why does merge sort need extra space when sorting arrays? What happens if not provided?

|

Without extra space, merge sort will be forced to use to shift elements, which will make it n^2

|

|

|

Quicksort |

17 23 29 41 32 33 24 |

|

|

expected time complexity inserting into hash table? |

O(1) |

|

|

Worst case time complexity for inserting into Hash? |

O(n) |

|

|

Hash causes collision. What happens if separate chaining? |

each slot becomes a linked list. Collisions go int he linked list. |

|

|

Hash causes collision. What happens if linear probing? |

algorithm adds one to the slot number until an empty slot is found. |

|

|

Hash causes collision. What happens if quadratic probing? |

adds squares to slot number |

|

|

Hash causes collision. What happens if double hashing? |

use separate hash and add multiples of offset |

|

|

Hash tables: what is primary clustering? what probing causes it? |

linear probing when collisions happen. Future insertions are likely to cause cluster to grow.

|

|

|

Hash tables: secondary clustering? what causes it? |

quadratic sorting. Algorithm adds successive squares, and probing will have to revisit previous collisions |

|

|

linear probing: x h(x) a 2 b 4 c 0 d 2 e 2 f 3 |

0 c 1 2 a 3 d 4 b 5 e 6 f |

|

|

quadric probing x h(x) a 2 b 4 c 0 d 2 e 2 f 3 |

0 c 1 2 a 3 d 4 b 5 f 6 e |

|

|

double hashing x h(x) a 2 b 4 c 0 d 2 e 2 f 3 |

0 c 1 e 2 a 3 f 4 b 5 d 6 |

|

|

linear probing: x h(x) a 3 b 4 c 1 d 3 e 3 f 2 |

0 1 c 2 f 3 a 4 b 5 d 6 e |

|

|

if deleting from hash table, we need to annotate position. Why? |

removing may cause gap in probing sequence, which would cause a failure in searching. |

|

|

what happens when deleting 2 elements from heap? 2 3 5 8 12 10

|

5 8 12 10 |

|

|

add element to heap 2 3 5 7 11 13 |

1 3 2 7 11 13 5 |

|

|

Heap operations and time complexities? |

add, delete O(log n) first O(1) |

|

|

add 1 to heap 2 3 5 8 12 10 |

1 3 2 8 12 10 5

|

|

|

add 4 to heap 2 3 5 8 12 10 |

2 3 4 8 12 10 5 |

|

|

what is the differenc ebetween heap and priority queue? |

heap is an implementation of PQ |

|

|

what is an up tree? |

collection of nodes. Each node has a pointer set to its reprsentative. Uptrees support find and union, and both of these are very fast |

|

|

how does up tree compression algorithm work? |

whenever find is called, upon reterning it sets the current pointer to point to the result of the find returns |

|

|

For a persistent list, what is the time complexity of inserting something at the end of a list assuming we have a last pointer? |

O(n) –– The last pointer does not matter, because we still have to copy the list. |

|

|

For a persistent list, what is the time complexity of inserting something at the end of a list?

|

O(n)

|

|

|

Suppose we have a list A and wish to create a new list B that is identical, except the first element will be changed. If use a persistent list, what will be the space complexity?

|

O(1) –– We need to create one cell to represent the changed element, but we can share the remaining elements with the original list.

|

|

|

For a persistent list, what is the time complexity of deleting something from the beginning of a list?

|

O(1)

|

|

|

For a persistent list, what is the time complexity of deleting something from the end of a list, assuming we have a last pointer?

|

O(n) –– The last pointer does not help because we still need to copy the list.

|

|

|

For a persistent list, what is the time complexity of deleting something from the end of a list?

|

O(n)

|

|

|

//fce-study.netdna-ssl.com/2/images/upload-flashcards/88/78/28/6887828_m.png

|

//fce-study.netdna-ssl.com/2/images/upload-flashcards/88/78/46/6887846_m.png

|

|

|

//fce-study.netdna-ssl.com/2/images/upload-flashcards/88/78/52/6887852_m.png

|

//fce-study.netdna-ssl.com/2/images/upload-flashcards/88/78/58/6887858_m.png

|

|

|

//fce-study.netdna-ssl.com/2/images/upload-flashcards/88/81/37/6888137_m.png

|

//fce-study.netdna-ssl.com/2/images/upload-flashcards/88/81/43/6888143_m.png

|

|

|

//fce-study.netdna-ssl.com/2/images/upload-flashcards/88/81/61/6888161_m.png

|

//fce-study.netdna-ssl.com/2/images/upload-flashcards/88/81/64/6888164_m.png

|

|

|

//fce-study.netdna-ssl.com/2/images/upload-flashcards/88/81/88/6888188_m.png

|

//fce-study.netdna-ssl.com/2/images/upload-flashcards/88/81/94/6888194_m.png

|

|

|

//fce-study.netdna-ssl.com/2/images/upload-flashcards/88/82/06/6888206_m.png

|

//fce-study.netdna-ssl.com/2/images/upload-flashcards/88/82/12/6888212_m.png

|

|

|

//fce-study.netdna-ssl.com/2/images/upload-flashcards/88/82/24/6888224_m.png

|

//fce-study.netdna-ssl.com/2/images/upload-flashcards/88/82/27/6888227_m.png

|

|

|

Write a function in Clojure that will take a list and return the sum of all its elements. You may use the defrecord type we did in class, or Clojure's built–in lists.

|

//fce-study.netdna-ssl.com/2/images/upload-flashcards/88/83/02/6888302_m.png |

|

|

Suppose we have a list A and wish to create a new list B that is identical, except the first element will be changed. If we use a mutable list, what will be the space complexity?

|

O(n)––We need to create one cell to represent the changed element, but we also need to copy the original list. Otherwise, changes made to the original list will be visible in our copy.

|

|

|

For a mutable list, what is the time complexity of inserting something at the beginning of a list?

|

O(1)

|

|

|

For a mutable list, what is the time complexity of inserting something at the end of a list, assuming we have a last pointer?

|

O(1)

|

|

|

For a mutable list, what is the time complexity of inserting something at the end of a list?

|

O(n) |

|

|

For a mutable list, what is the time complexity of deleting something from the beginning of a list?

|

O(n)

|

|

|

For a mutable list, what is the time complexity of deleting something from the end of a list, assuming we have a last pointer?

|

O(n)––The last pointer does not help because we have to back it up" one step, and going backwards in a singly–linked list is O(n)." |

|

|

For a mutable list, what is the time complexity of deleting something from the end of a list?

|

O(n)

|

|

|

In a doubly–linked list, what is the purpose of a sentinel?

|

A sentinel takes the place of of null pointers, and allows the same code to handle both interior nodes and edge cases. This simplifies code.

|

|

|

What is the advantage of doubly–linked lists over singly–linked list?

|

A doubly–linked list allows the program to traverse the list backwards quickly.

|

|

|

If you only have persistent data, but want to be able to traverse a list forwards and backwards quickly (like you can in a doubly–linked list), what can you do about it?

|

You can use a list zipper to handle this functionality.

|

|

|

What advantage does tail recursion confer to the programmer? |

Tail recursion allows the compiler to reuse the stack frame of a function when it makes its recursive call. This transforms the recursive function internally into an efficient loop. |

|

|

Explain briefly how to implement a stack using arrays (vectors). How are push and pop implemented?

|

You need a vector for the stack, and an integer for the top of stack pointer. This pointer will point to the first available entry in the stack.

|

|

|

Explain briefly how to implement a stack using lists. How are push and pop implemented?

|

You can implement a stack with a list by inserting at the front to implement push and deleting from the front to implement pop. |

|

|

For a persistent list, what is the time complexity of inserting something at the end of a list assuming we have a last pointer?

|

O(n) -- The last pointer does not matter, because we still have to copy the list.

|

|

|

For a persistent list, what is the time complexity of inserting something at the end of a list?

|

O(n)

|

|

|

Suppose we have a list A and wish to create a new list B that is identical, except the first element will be changed. If use a persistent list, what will be the space complexity? |

O(1) -- We need to create one cell to represent the changed element, but we can share the remaining elements with the original list.

|

|

|

For a persistent list, what is the time complexity of deleting something from the beginning of a list? |

O(1)

|

|

|

For a persistent list, what is the time complexity of deleting something from the end of a list, assuming we have a last pointer?

|

O(n) -- The last pointer does not help because we still need to copy the list.

|

|

|

For a persistent list, what is the time complexity of deleting something from the end of a list?

|

O(n)

|

|

|

Suppose we have a list A and wish to create a new list B that is identical, except the first element will be changed. If we use a mutable list, what will be the space complexity?

|

O(n)--We need to create one cell to represent the changed element, but we also need to copy the original list. Otherwise, changes made to the original list will be visible in our copy.

|

|

|

For a mutable list, what is the time complexity of inserting something at the beginning of a list? |

O(1)

|

|

|

For a mutable list, what is the time complexity of inserting something at the end of a list, assuming we have a last pointer? |

O(1)

|

|

|

For a mutable list, what is the time complexity of inserting something at the end of a list?

|

O(n)

|

|

|

For a mutable list, what is the time complexity of deleting something from the beginning of a list?

|

O(n)

|

|

|

For a mutable list, what is the time complexity of deleting something from the end of a list, assuming we have a last pointer?

|

O(n)--The last pointer does not help because we have to "back it up" one step, and going backwards in a singly-linked list is O(n).

|

|

|

For a mutable list, what is the time complexity of deleting something from the end of a list? |

O(n)

|

|

|

In a doubly-linked list, what is the purpose of a sentinel?

|

A sentinel takes the place of of null pointers, and allows the same code to handle both interior nodes and edge cases. This simplifies code.

|

|

|

What is the advantage of doubly-linked lists over singly-linked list?

|

A doubly-linked list allows the program to traverse the list backwards quickly.

|

|

|

If you only have persistent data, but want to be able to traverse a list forwards and backwards quickly (like you can in a doubly-linked list), what can you do about it?

|

You can use a list zipper to handle this functionality.

|

|

|

What advantage does tail recursion confer to the programmer?

|

Tail recursion allows the compiler to reuse the stack frame of a function when it makes its recursive call. This transforms the recursive function internally into an efficient loop.

|

|

|

What are the three stack operations? Give their time complexities.

|

The three operations are push, pop, and top. They all take O(1) time.

|

|

|

Explain briefly how to implement a stack using arrays (vectors). How are push and pop implemented?

|

You need a vector for the stack, and an integer for the top of stack pointer. This pointer will point to the first available entry in the stack.

|

|

|

Explain briefly how to implement a stack using lists. How are push and pop implemented?

|

You can implement a stack with a list by inserting at the front to implement push and deleting from the front to implement pop. |