![]()

![]()

![]()

Use LEFT and RIGHT arrow keys to navigate between flashcards;

Use UP and DOWN arrow keys to flip the card;

H to show hint;

A reads text to speech;

21 Cards in this Set

- Front

- Back

|

Optimization Techniques

|

A collection of techniques for determination of the best (optimal) course of action of a decision problem under the restriction of limited resources and other constraints

|

|

|

Constrained vs unconstrained optimization

|

unconstrained optimization has no constraint on the region of search

|

|

|

Unimodal vs bimodal functions

|

Unimodal functions: have a single extreme value

Bimodal functions: have two extreme values |

|

|

Where to look for extreme values

|

boundaryies of ourfunction

discontinuities in our function |

|

|

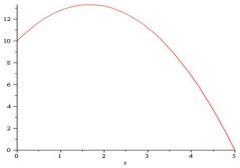

Concave

|

ANY line connecting two points on the objective function surface lies entirely below the surface

|

|

|

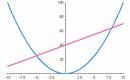

Convex

|

ANY line connecting two points on the objective function surface lies entirely above the surface

|

|

|

convex set

|

any two points on the boundary of the search region can be connected by a straight line that lies ENTIRELY IN the region

|

|

|

Unrestricted functions of a single variable

(problem) |

find minimum/maximum of y=f(x)

|

|

|

Unrestricted functions:

Condition for extreme value |

if

f ' (x)=0 then x=a may be an extreme value |

|

|

Unrestricted functions:

How to determine if a possible extreme value is a maximum or minimum |

minimum:

f " (a) > 0 maximum: f " (a) < 0 if f '' (a) = 0 and f ''' (a) = 0 then examine f '''' (a) |

|

|

Unrestricted functions in multiple variables:

steps for solving |

1) ∆'y=0 --> ∂y/∂xi=0 for all i

(this gives us our possible extreme of x*=(x1*,x2*,...)) 2) A= Hessian 3) A1=a11 A2= det(B2) where B2= [a11 a12 a21 a22] A3=det[B3] where B3= [a11 a12 a13 a21 a22 a23 a31 a32 a33] etc. 3) minimum: A1>0, A2>0, A3>0, ... maximum: A1<0, A2>0, A3<0, ... |

|

|

Hessian

|

A=

[a11 a21 a31 a12 a22 a32 a12 a23 a33] where aij=d^2(y)/(dxi*dxj) i=row j=column |

|

|

Newton Raphson Method

|

1) select x0

2) evaluate F^k and Jacobian, J^k, and solve J^k*z=-F^k for z (z is defined as z=x^(k+1)-x^k) 3) if |z|<ε stop repeating we use x^(k+1) x^(k+1)=z+x^k 4) otherwise go to step 2) |

|

|

Jacobian Matrix

|

J^k=

[df1/dx1 df1/dx2 ... df1/dxn df2/dx1 df2/dx2 ... df2/dxn . . . dfm/dx1 dfm/dx2 ... dfm/dxn] |

|

|

Kuhn Tucker

|

conditions that are necessary for a solution in NON-linear programming to be optimal

|

|

|

Lagrange Multiplier

|

a method for finding the maximum/minimum of a function subject to constraints

|

|

|

Newton Raphson

|

method for finding successively better approximations to the zeros (or roots) of a real-valued function

|

|

|

Lagrange Multiplier

(step by step method) |

|

|

|

Sufficient Conditions for Kuhn Tucker Method

|

*Maximum (ie not a minimum):

Concave y Constraints form a convex set *Minimum (ie not a maximum): Covex y Constraints form a convex set |

|

|

Method of Slack Variables

(use) |

Used to change an inequality constraint into an equality constraint

|

|

|

Method of Slack Variables

(step by step) |

(1) put constraint in gi<=0 form

(2) add a new x_(k+1)^2 variable and set =0 instead of <=0 gi+x_(k+1)^2=0 (3) these are your new constraints proceed with previous methods |