
79 Cards in this Set


control variable

a potential variable that an experimenter holds constant on purpose, e.g., colored ID numbers were all the same size, the tests were all the same, and the same experimenters ran each session

comparison group

a group in an experiment whose level on the independent variable differs from that of the treatment group in some intended and meaningful way, e.g., green vs. black ink

control group

a level of an independent variable that is intended to represent "no treatment" or a neutral condition

treatment group

participants in an experiment who are exposed to the level of the independent variable that involves a medication, therapy, or intervention

placebo group

a control group that is exposed to an inert treatment, such as a sugar pill

confounds

a potential alternative explanation for a research finding (a threat to internal validity)

design confound

a second variable that happens to vary systematically along with the independent variable and is therefore an alternative explanation for the results, e.g., the pasta served in the large bowl was more appetizing than the pasta served in the medium bowl

systematic variability

levels of a variable coinciding in some predictable way with experimental group membership, creating a potential confound, e.g., the research assistants' attitude is systematically different toward the large-bowl group than toward the medium-bowl group

unsystematic variability

When levels of a variable fluctuate independently of experimental group membership, contributing to variability within groups, e.g., the research assistants' demeanor is random or haphazard toward participants in both groups

selection effects

occurs in an experiment when the kinds of participants in one level of the independent variable are systematically different from those in the other (different IV groups have different types of participants); can occur when experimenters let participants choose which group they want to be in

random assignment

the use of a random method (e.g., flipping a coin) to assign participants to different experimental groups; ensures that every participant in an experiment has an equal chance to be in each group
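To make the idea concrete, here is a minimal Python sketch of random assignment. It is an illustration, not a procedure from the course: the function `randomly_assign`, the participant IDs, and the bowl-size group labels are hypothetical. Instead of literal coin flips (which can leave groups of unequal size), it shuffles the whole list and deals participants out in turn, so which group a person lands in depends only on chance.

```python
import random

def randomly_assign(participants, groups, seed=None):
    """Randomly assign participants to groups (hypothetical helper).

    Shuffling first means group membership depends only on chance,
    not on any characteristic of the participant. Dealing the shuffled
    list out in turn keeps group sizes within one of each other.
    """
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    assignment = {group: [] for group in groups}
    for i, person in enumerate(shuffled):
        assignment[groups[i % len(groups)]].append(person)
    return assignment


participants = [f"P{i:02d}" for i in range(1, 21)]  # 20 hypothetical IDs
result = randomly_assign(participants, ["large bowl", "medium bowl"], seed=1)
for group, members in result.items():
    print(group, len(members), members)
```

With 20 participants and two groups, each group receives 10 people; which 10 changes with the seed. Letting participants pick their own bowl instead would invite the selection effects described above.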

matched groups

participants who are similar on some measured variable are grouped into sets; members of each matched set are then randomly assigned to different experimental conditions
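A minimal Python sketch of the matched-groups procedure follows, assuming a single measured matching variable (hypothetical GPA scores here) and one participant per condition in each matched set; the function `matched_groups` and all names and scores are illustrative. Participants are ranked on the matching variable, grouped into sets of similar scorers, and then randomly assigned within each set.

```python
import random

def matched_groups(scores, conditions, seed=None):
    """Hypothetical helper: match on a measured variable, then randomize within sets.

    scores: dict mapping participant ID -> score on the matching variable.
    conditions: condition labels; each matched set has one member per condition.
    Participants left over after the last full set are not assigned.
    """
    rng = random.Random(seed)
    k = len(conditions)
    ranked = sorted(scores, key=scores.get)  # lowest to highest score
    assignment = {c: [] for c in conditions}
    usable = len(ranked) - len(ranked) % k
    for start in range(0, usable, k):
        matched_set = ranked[start:start + k]  # k participants with similar scores
        rng.shuffle(matched_set)               # random assignment within the set
        for condition, person in zip(conditions, matched_set):
            assignment[condition].append(person)
    return assignment


gpa = {"Ann": 3.9, "Ben": 2.1, "Cal": 3.8, "Dee": 2.3, "Eve": 3.0, "Fay": 3.1}
print(matched_groups(gpa, ["treatment", "control"], seed=42))
```

Because the two members of each pair have similar GPAs, the conditions end up roughly equal on GPA, while assignment within each pair is still left to chance.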

demand characteristic

a threat to internal validity that occurs when some cue leads participants to guess a study's hypotheses or goals

posttest-only design

participants are randomly assigned to independent variable groups and are tested on the dependent variable once

pretest/posttest design

participants are randomly assigned to at least two groups and are tested on the key dependent variable twice: once before and once after exposure to the independent variable. Used when researchers want to evaluate whether random assignment made the groups equal; also helps track how participants have changed over time in response to the manipulation

repeated measures design

a type of within-groups design in which participants are measured on a dependent variable more than once, that is, after exposure to each level of the independent variable

Within-Groups design

each participant is presented with all levels of the independent variable; this allows researchers to treat each participant as his or her own control and requires fewer participants than an independent-groups (aka between-subjects) design, but it also presents the potential for order effects and demand characteristics

ADVANTAGES:
* equivalent comparison (the same participants receive both levels of the IV)
* increased power (more likely to get statistically significant results)
* fewer participants needed

DISADVANTAGES:
* order effects
* not always possible or practical to expose the same sample to every level of the IV
* demand characteristics (participants can guess the hypotheses)

between-subjects design

different groups of participants are exposed to different levels of the independent variable, such that each participant experiences only one level of the independent variable (aka independent-groups design). ADVANTAGES: Random assignment or matched groups can help establish internal validity in independent-groups designs by minimizing selection effects

manipulation check

in an experiment, an extra dependent variable that researchers can include to determine how well an experimental manipulation worked

pilot study

a study completed before (or sometimes after) the study of primary interest, usually to test the effectiveness or characteristics of the manipulations

order effects

a threat to internal validity in which exposure to one condition changes participants' responses to a later condition (an alternative explanation because the outcome might be caused by the IV, but it might also be caused by the order in which the levels of the variable were presented)

practice effects

a type of order effect in which people's performance improves over time because they become practiced at the dependent measure (not because of the manipulation or treatment)

carryover effects

a type of order effect in which some form of contamination carries over from one condition to the next

counterbalancing

in a repeated-measures experiment, presenting the levels of the independent variable to participants in different sequences to control for order effects

full counterbalancing

a method of counterbalancing in which all possible condition orders are represented
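The orders required for full counterbalancing are simply all permutations of the conditions, which Python's standard `itertools.permutations` can list. In this sketch the condition labels A, B, C and the helper `assign_orders` are hypothetical; participants are cycled through the orders, so each order is used equally often when the sample size is a multiple of the number of orders.

```python
from itertools import permutations

def assign_orders(participants, conditions):
    """Full counterbalancing: cycle participants through every possible order."""
    orders = list(permutations(conditions))
    return {person: orders[i % len(orders)] for i, person in enumerate(participants)}


conditions = ["A", "B", "C"]
orders = list(permutations(conditions))
print(len(orders))  # 3! = 6 possible orders
for order in orders:
    print(" -> ".join(order))

plan = assign_orders([f"P{i}" for i in range(1, 13)], conditions)
print(plan["P1"], plan["P7"])  # P1 and P7 both get the first order, A -> B -> C
```

The number of orders grows as n! (24 for four conditions, 120 for five), which is why researchers often switch to partial counterbalancing as the number of conditions grows.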

partial counterbalancing

a method of counterbalancing in which some, but not all, of the possible condition orders are represented

Latin square

a formal system of partial counterbalancing that ensures that each condition in a within-groups design appears in each position at least once
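One simple way to build a Latin square is to rotate the list of conditions by one position per row. This Python sketch (the function name `latin_square` and the labels A to D are hypothetical) produces n orders for n conditions, with each condition appearing exactly once in each position.

```python
def latin_square(conditions):
    """Cyclic Latin square: row r is the condition list rotated left by r places."""
    n = len(conditions)
    return [[conditions[(row + pos) % n] for pos in range(n)] for row in range(n)]


square = latin_square(["A", "B", "C", "D"])
for row in square:
    print(" ".join(row))
```

The four printed rows are A B C D, B C D A, C D A B, and D A B C. This cyclic version does not control which condition immediately follows which (B always comes right after A, for example); a balanced Latin square adds that property, but the simple version already meets the definition on the card.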

one-group, pretest/posttest design

an experiment in which a researcher recruits one group of participants; measures them on a pretest; exposes them to a treatment, intervention, or change; and then measures them on a posttest

maturation

an experimental group improves over time only because of natural development or spontaneous improvement. Ex: Rambunctious boys settle down as they get used to the camp setting. Question to ask: Did the researchers use a comparison group of boys who had an equal amount of time to mature but who did not receive the treatment?

history

an experimental group changes over time because of an external factor or event that affects all or most members of the group. Ex: Dormitory residents use less air conditioning in November than in September because the weather is cooler. Question to ask: Did the researchers include a comparison group that had an equal exposure to the external event but did not receive the treatment?

regression to the mean

an experimental group whose average is extremely low (or high) at pretest will get better (or worse) over time, because the random events that caused the extreme pretest scores do not recur the same way at posttest. Ex: A group's average is extremely depressed at pretest, in part because some of the people volunteered for therapy when they were feeling much more depressed than usual. Question to ask: Did the researchers include a comparison group that was equally extreme at pretest but did not receive the therapy?
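A small simulation shows why regression to the mean happens even with no treatment at all. This is a hypothetical model, not data: each person's observed depression score is assumed to be a stable true level plus independent day-to-day noise, and the 10% with the highest pretest scores stand in for people who volunteer when they feel unusually depressed. All numbers (means, SDs, seed, cutoff) are arbitrary choices.

```python
import random
import statistics

rng = random.Random(2024)
N = 10_000

# Hypothetical model: observed score = stable true level + random noise of the day.
true_level = [rng.gauss(50, 10) for _ in range(N)]
pretest = [t + rng.gauss(0, 10) for t in true_level]
posttest = [t + rng.gauss(0, 10) for t in true_level]  # fresh noise, no therapy given

# "Volunteers": the 10% with the highest (most depressed) pretest scores.
cutoff = sorted(pretest)[int(0.9 * N)]
extreme = [i for i in range(N) if pretest[i] >= cutoff]

pre_mean = statistics.mean(pretest[i] for i in extreme)
post_mean = statistics.mean(posttest[i] for i in extreme)
print(f"extreme group pretest mean:  {pre_mean:.1f}")
print(f"extreme group posttest mean: {post_mean:.1f}")
```

The selected group's posttest mean comes out noticeably lower (less depressed) than its pretest mean, yet still above the overall mean of 50, because only the noise was redrawn, not the true level. An equally extreme comparison group that receives no therapy would show the same drop, which is why the card's question asks for one.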

attrition

an experimental group changes over time, but only because the most extreme cases have systematically dropped out and their scores are not included in the posttest. Ex: Because the most rambunctious boy in the cabin leaves camp early, his disruptive behavior affects the pretest mean but not the posttest mean. Question to ask: Did the researchers compute the pretest and posttest scores with only the final sample included, removing any dropouts' data from the pretest group average?

testing

a type of order effect in which an experimental group changes over time because repeated testing has affected the participants; subtypes include fatigue effects and practice effects. Ex: A classroom's math scores improve only because the students take the same version of the test both times and are therefore more practiced at posttest. Questions to ask: Did the researchers have a comparison group take the same two tests? Did they use a posttest-only design, or did they use alternative forms of the measure for the pretest and posttest?

instrumentation

an experimental group changes over time, but only because repeated measurements have changed the quality of the measurement instrument. Ex: Coders get more lenient over time, so the same exact behavior is coded as less rambunctious at posttest than at pretest. Questions to ask: Did the researchers train coders to use the same standards when coding? Are pretest and posttest measures demonstrably equivalent?

observer bias

an experimental group's ratings differ from a comparison group's, but only because the researcher expects the groups' ratings to differ. Ex: The researcher expects a low-sugar diet to decrease the campers' unruly behavior, so he notices only their calm behavior and ignores the wild behavior. Question to ask: Were the observers of the dependent variable unaware of which condition participants were in? (A comparison group does not automatically get rid of the problem of observer bias.)

design confound (cont.)

a second variable that unintentionally varies systematically with the independent variable. Ex: Pasta served in a large bowl appeared more appetizing than pasta served in a medium bowl. Question to ask: Did the researchers turn nuisance variables into control variables, for example, by keeping the pasta recipe constant?

selection effect (cont.)

In an independent-groups design, when the two independent variable groups have systematically different kinds of participants in them. Ex: In the autism study (Ch. 10), some parents insisted they wanted their children to be in the intensive treatment group rather than the control group. Question to ask: Did the researchers use random assignment or matched groups to equalize groups?

order effect (cont.)

In a within-groups design, when the effect of the independent variable is confounded with carryover from one level to the other, or with practice, fatigue, or boredom. Ex: All participants in a bonding study play with their own toddler, followed by a different toddler. Question to ask: Did the researchers counterbalance the orders of presentation?

demand characteristic (cont.)

participants guess what the study's purpose is and change their behavior in the expected direction. Ex: Campers guess that the low-sugar diet is supposed to make them calmer, so they change their behavior accordingly. Questions to ask: Were the participants kept unaware of the purpose of the study? Was it an independent-groups design, which makes participants less able to guess the study's purpose?

placebo effect (cont.)

Participants in an experimental group improve only because they believe in the efficacy of the therapy or drug they receive. Ex: Women receiving cognitive therapy improve simply because they believe the therapy will work for them. Question to ask: Did a comparison group receive a placebo (inert) drug or a placebo therapy?

double-blind placebo control study

a study that uses a treatment group and a placebo group and in which neither the research staff nor the participants know who is in which group

null effect

a finding that an independent variable did not make a difference in the dependent variable; there is no significant covariance between the two (aka "null result")

Reasons for null effects

Not enough between-groups difference:
* weak manipulations
* insensitive measures
* ceiling/floor effects
* reverse confounds

Too much within-groups variability:
* measurement error
* individual differences
* situation noise

ceiling effect

an experimental design problem in which independent variable groups score almost the same on a dependent variable, such that all scores fall at the high end of their possible distribution

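floor effect

an experimental design problem in which independent variable groups score almost the same on a dependent variable, such that all scores fall at the low end of their possible distribution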

noise

the unsystematic variability among the members of a group in an experiment (aka error variance or unsystematic variance)

measurement error

any factor that can inflate or deflate a person's true score on a dependent measure; the degree to which the recorded measure for a participant on some variable differs from the true value of the variable for that participant

* random: over a sample, errors both inflate and deflate scores
* systematic: errors result in biased measurement

1. Solution 1: use reliable, precise measurements
2. Solution 2: measure more instances
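The second solution works because random errors tend to cancel out when several measurements are averaged. The Python sketch below assumes a hypothetical participant with a true score of 100 and purely random error with an SD of 15; the names `TRUE_SCORE`, `ERROR_SD`, and `observed_average` are illustrative.

```python
import random
import statistics

rng = random.Random(7)
TRUE_SCORE = 100  # hypothetical true value for one participant
ERROR_SD = 15     # hypothetical size of purely random measurement error

def observed_average(k):
    """Average of k independent measurements, each = true score + random error."""
    return statistics.mean(TRUE_SCORE + rng.gauss(0, ERROR_SD) for _ in range(k))

for k in (1, 4, 16, 64):
    # Repeat the whole measuring procedure many times to see how far averages stray.
    averages = [observed_average(k) for _ in range(2000)]
    print(f"{k:>2} measurements: typical error about {statistics.stdev(averages):.1f}")
```

The typical error shrinks roughly in proportion to one over the square root of k, so it is about half as large for every fourfold increase in measurements. Averaging does nothing for systematic error: if every measurement is biased upward by the same amount, the average is biased by that amount too.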

individual difference

a source of within-groups variability; can be a problem in independent-groups designs

1. Solution 1: change the design (e.g., use a within-groups design)
2. Solution 2: add more participants

situation noise

unrelated events or distractions in the external environment that create unsystematic variability within groups in an experiment

power

the likelihood that a study will show a statistically significant result when some effect is truly present in the population; the probability of not making a Type II error
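Power can be estimated by simulation: generate many hypothetical studies in which a true effect exists and count how often the test comes out significant. This Python sketch is a simplification under stated assumptions: normally distributed scores with a known SD of 1, a true effect of 0.5 SD, and a two-sided z-test at alpha = .05 instead of the t-test a real analysis would usually use. The function `simulated_power` and its parameters are hypothetical.

```python
import random
import statistics

def simulated_power(effect_size, n_per_group, alpha=0.05, sims=2000, seed=0):
    """Share of simulated studies that reach significance when a true effect
    of `effect_size` (in SD units) exists in the population.

    Simplification: two-sided z-test with the population SD known to be 1.
    """
    rng = random.Random(seed)
    z_crit = statistics.NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = .05
    se = (2 / n_per_group) ** 0.5  # standard error of the difference between two means
    hits = 0
    for _ in range(sims):
        control = [rng.gauss(0, 1) for _ in range(n_per_group)]
        treatment = [rng.gauss(effect_size, 1) for _ in range(n_per_group)]
        z = (statistics.mean(treatment) - statistics.mean(control)) / se
        if abs(z) > z_crit:
            hits += 1
    return hits / sims


for n in (20, 64, 100):
    print(f"n = {n:>3} per group: estimated power {simulated_power(0.5, n):.2f}")
```

Estimated power rises as the number of participants per group grows, which is one reason adding participants helps when a study risks a null effect.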

Why are null effects important?

To eliminate threats to internal validity and to increase a study's power

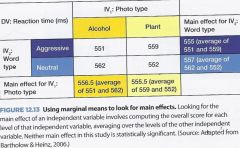

interaction effect (interaction)

whether the effect of the original independent variable (like cell phone use) depends on the level of another IV (like driver age); allows researchers to establish whether or not "it depends," e.g., Does the effect of cell phone use depend on driver age?

factorial design

a design in which there are two or more independent variables (factors); the most common factorial design crosses two IVs, meaning researchers study each possible combination of the IVs

cells

a condition in an experiment; in a factorial design, it represents one of the possible combinations of two independent variables

participant variable

a variable whose levels are selected (i.e., measured), not manipulated, e.g., age, gender, ethnicity

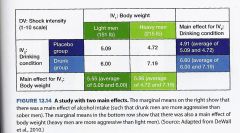

main effect

the overall effect of one independent variable on the dependent variable, averaging over the levels of the other independent variable; a simple difference. In a factorial design with two IVs, there are two main effects to examine

marginal means

the arithmetic means for each level of an independent variable, averaging over the levels of the other independent variable; researchers inspect the marginal means to examine the main effects in a factorial design, and they use statistics to find out whether the difference in the marginal means is statistically significant
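The arithmetic behind marginal means, main effects, and an interaction can be sketched for a 2 x 2 design. The cell means below are invented for illustration (hypothetical braking times in milliseconds for the cell phone by driver age example), and the sketch assumes equal numbers of participants per cell, so each marginal mean is a simple average of two cells. It only computes the descriptive differences; deciding whether they are statistically significant still requires an inferential test such as an ANOVA.

```python
# Invented cell means (hypothetical braking times in ms) for a 2 x 2 design.
cell_means = {
    ("no phone", "younger"): 900,
    ("no phone", "older"): 1000,
    ("on phone", "younger"): 950,
    ("on phone", "older"): 1200,
}
phone_levels = ["no phone", "on phone"]
age_levels = ["younger", "older"]

# Marginal means: average each level of one IV over the levels of the other IV
# (assumes equal cell sizes).
phone_marginals = {
    p: sum(cell_means[(p, a)] for a in age_levels) / len(age_levels) for p in phone_levels
}
age_marginals = {
    a: sum(cell_means[(p, a)] for p in phone_levels) / len(phone_levels) for a in age_levels
}

# Main effects: differences between marginal means.
main_effect_phone = phone_marginals["on phone"] - phone_marginals["no phone"]
main_effect_age = age_marginals["older"] - age_marginals["younger"]

# Interaction: does the phone effect differ by age? (a difference in differences)
phone_effect_younger = cell_means[("on phone", "younger")] - cell_means[("no phone", "younger")]
phone_effect_older = cell_means[("on phone", "older")] - cell_means[("no phone", "older")]
interaction = phone_effect_older - phone_effect_younger

print(phone_marginals, age_marginals)
print(main_effect_phone, main_effect_age, interaction)
```

With these numbers, the phone marginal means are 950 and 1075 ms (a main effect of 125 ms), the age marginal means are 925 and 1100 ms (a main effect of 175 ms), and the phone effect is 50 ms for younger drivers but 200 ms for older drivers. That 150 ms difference in differences is what an interaction looks like: the effect of cell phone use depends on age.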

finding main effects and interactions

Why do we run quasi-experiments?

Sometimes you can't randomly assign participants to the independent variable levels

quasi-experiment

a study that is similar to an experiment except that the researchers do not have full experimental control, e.g., they may not be able to randomly assign participants to the independent variable conditions; instead, participants are assigned to conditions by teachers, political regulations, or acts of nature, or even by their own choice

Nonequivalent control group design

a quasi-experiment that has at least one treatment group and one comparison group, but participants have not been randomly assigned to the two groups

Nonequivalent control group pretest/posttest design

a quasi-experiment that has at least one treatment group and one comparison group, in which participants have not been randomly assigned to the two groups, and in which at least one pretest and one posttest are administered

interrupted time series design

a quasi-experiment that measures participants repeatedly on a dependent variable before, during, and after the "interruption" caused by some event; uses one group only, e.g., decision making measured before and after a food break

Nonequivalent control group interrupted time-series design

a quasi-experiment with two or more groups in which participants have not been randomly assigned to groups, participants are measured repeatedly on a dependent variable before, during, and after the interruption caused by some event, and the presence or timing of the interrupting event differs among the groups (adds a comparison group)

wait-list design

all the participants plan to receive treatment, but are assigned to do so at different times

small-N design

a study in which researchers gather info from just a few cases

single-N

a study in which researchers gather info from only one animal or one person

stable-baseline design

a study in which a researcher observes behavior for an extended baseline period before beginning a treatment or other intervention; if behavior during the baseline is stable, the researcher is more certain of the treatment's effectiveness

multiple-baseline design

researchers stagger their introduction of an intervention across a variety of contexts, times, or situations

reversal design

the researcher observes a problem behavior both with and without treatment, but takes the treatment away for a while (reversal period) to see whether the problem behavior returns (reverses)

replicable

Pertaining to a study whose results have been obtained again when the study was repeated. Would we get similar results if we did the study again with new participants from the same population?

Direct replication

Same variables, same operational definitions: researchers repeat the original study as closely as possible to see whether the original effect shows up in the newly collected data (aka exact replication)

Conceptual replication

Same variables (concepts), different operational definitions: researchers examine the same research question (the same conceptual variable) but use different procedures for operationalizing the variables

Replication-plus-extension

Same variables (concepts), plus some new variables: researchers replicate their original study but add variables or conditions that test additional questions

ecological validity

How closely a study resembles real-world situations (aka mundane realism); the extent to which the tasks and manipulations of a study are similar to real-world contexts

theory-testing mode

A researcher's intent for a study, testing association claims or causal claims to investigate support for a theory

* goal: test the theory rigorously and isolate variables
* prioritize internal validity
* artificial situations may be required

generalization mode

The intent of researchers to generalize the findings from the samples and procedures in their study to other populations or contexts

* frequency claims
* goal: make a claim about a population
* the real world matters
* external validity is essential

field setting

A real-world setting for a research study

cultural psychology

a subdiscipline of psychology concerned with how cultural settings shape a person's thoughts, feelings, and behavior, and how these in turn shape cultural settings