107 Cards in this Set

- Front

- Back

|

Random Experiment

|

An experiment where outcome varies in unpredictable fashion when repeated under same conditions

|

|

|

Sample Space

|

Set of Possible Outcomes

|

|

|

Event

|

Subset of sample space S which contains specific outcomes

|

|

|

Universal Set

|

S, which contains all outcomes and therefore always occurs

|

|

|

Null Event

|

Impossible or null event which contains no outcomes and never occurs

|

|

|

Union

|

"or" A∪B=A+B ... set of outcomes that are in either A or B

|

|

|

Intersection

|

"and" AB ... set of outcomes that are in both A and B

|

|

|

Complement of an event A

|

set of all outcomes in S but not in A

|

|

|

De Morgan's Rules

|

(A+B)'=A'B'

(AB)'=A'+B' |

|
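De Morgan's rules can be checked on a small finite sample space. The sketch below assumes a hypothetical experiment (one roll of a six-sided die) and two illustrative events A and B that are not part of the card set.

```python
# Hypothetical sample space: one roll of a six-sided die.
S = set(range(1, 7))
A = {2, 4, 6}   # illustrative event "even"
B = {4, 5, 6}   # illustrative event "greater than 3"

def complement(event, space=S):
    """Outcomes in the sample space that are not in the event."""
    return space - event

# (A+B)' = A'B'  : complement of the union is the intersection of complements
union_rule = complement(A | B) == (complement(A) & complement(B))
# (AB)' = A'+B'  : complement of the intersection is the union of complements
intersection_rule = complement(A & B) == (complement(A) | complement(B))

print(union_rule, intersection_rule)
```

Both comparisons should hold for any choice of A and B inside S, not only the two events used here.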

|

Mutually Exclusive (Disjoint) Events

|

AB=null space

|

|

|

Probability

|

Indicates how likely it is an event will occur

|

|

|

P(S)

|

1

|

|

|

P(A1+A2+...)

where A1, A2, etc. are disjoint events |

P(A1)+P(A2)+...

|

|

|

P(A')

|

1-P(A)

|

|

|

P(A+B)

when AB is not the null set |

P(A)+P(B)-P(AB)

|

|

|

Conditional Probability

|

P(A|B).

Probability of an event A given that an event B has occurred |

|

|

P(A|B)

|

P(AB)/P(B)

|

|
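The definition P(A|B)=P(AB)/P(B) can be worked through by counting outcomes. This is a minimal sketch assuming a hypothetical experiment of two fair dice; the events A ("sum is 8") and B ("first die shows 6") are chosen only for illustration.

```python
from fractions import Fraction
from itertools import product

# Hypothetical sample space: ordered results of two fair dice (36 outcomes).
S = list(product(range(1, 7), repeat=2))

def prob(event):
    """Probability of an event given as a predicate on outcomes."""
    return Fraction(sum(1 for outcome in S if event(outcome)), len(S))

def in_a(outcome):
    return outcome[0] + outcome[1] == 8      # illustrative event A

def in_b(outcome):
    return outcome[0] == 6                   # illustrative event B

p_a = prob(in_a)
p_b = prob(in_b)
p_ab = prob(lambda o: in_a(o) and in_b(o))   # P(AB)

p_a_given_b = p_ab / p_b                     # P(A|B) = P(AB)/P(B)
p_b_given_a = p_ab / p_a                     # P(B|A) = P(AB)/P(A)
print(p_a_given_b, p_b_given_a)
```

Multiplying back, P(A|B)P(B) and P(B|A)P(A) both recover P(AB), which is the joint probability relation.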

|

Joint Probability

|

P(AB)=P(A|B)P(B)=P(B|A)P(A)

|

|

|

Total or Marginal Probability

|

n mutually exclusive events B1, ..., Bn

union of all events = S P(A)=P(A|B1)P(B1)+...+P(A|Bn)P(Bn) |

|

|

Bayes' Rule

|

P(Bi|A)=P(ABi)/P(A)=P(A|Bi)P(Bi)/P(A)

|

|
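Total probability and Bayes' rule are usually applied together: first P(A) is built from the partition B1, ..., Bn, then each P(Bi|A) follows. The numbers below are hypothetical (three machines with made-up production shares and defect rates) and serve only as a worked example.

```python
from fractions import Fraction

# Hypothetical partition: which machine produced a part, P(Bi).
priors = [Fraction(1, 2), Fraction(3, 10), Fraction(1, 5)]
# Hypothetical conditional probabilities of a defect, P(A|Bi).
likelihoods = [Fraction(1, 100), Fraction(2, 100), Fraction(3, 100)]

# Total probability: P(A) = sum of P(A|Bi) P(Bi) over the partition.
p_a = sum(l * p for l, p in zip(likelihoods, priors))

# Bayes' rule: P(Bi|A) = P(A|Bi) P(Bi) / P(A).
posteriors = [l * p / p_a for l, p in zip(likelihoods, priors)]

print(p_a)
print(posteriors)
```

Because the Bi are mutually exclusive and cover S, the posteriors sum to 1.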

|

Statistically Independent Events

|

The probability of the occurrence of one event is not affected by the occurrence or non-occurrence of the other event.

P(A|B)=P(A) P(ABC)=P(A)P(B)P(C) |

|

|

Random Variable

|

A function that maps all outcomes of the sample space S into a set of real numbers.

RV X is a function that assigns a real number to each outcome in the sample space. |

|

|

Probability Distribution Function

|

Probability of the event {X<=x}

Fx(x)=P(X<=x) |

|

|

Properties of F(x):

Fx(-∞) Fx(∞) |

Fx(-∞)= P(X<-∞) = 0

Fx(∞) = P(X<∞) = 1 |

|

|

Probability Density Function

|

fx(x)=dFx(x)/dx

|

|

|

Probability Density Function (definition)

|

fx(x)∆x ≈ P(x < X < x+∆x) for small ∆x; over a finite interval, P(x1<X<x2)=∫(fx(x)dx,x1,x2)

|

|

|

Properties of density function

fx(x) is >, <, etc. 0 |

fx(x)≥0

|

|

|

Properties of density function

P(x1≤X≤x2) |

∫(fx(x)dx,x1,x2)=Fx(x2)-Fx(x1)

|

|

|

Properties of density function

Fx(x) in relation to fx(x) |

Fx(x)=P(X≤x)=∫(fx(λ)dλ,-∞,x)

|

|

|

Properties of density function

∫(fx(x)dx,-∞,∞) |

Fx(∞)-Fx(-∞)=1

|

|

|

Delta Function

|

δ(x)=dU(x)/dx

|

|

|

unit step function

|

U(x)=0 when x<0

U(x)=1 when x>=0 |

|

|

delta function/dirac function

|

δ(x)=∞ when x=0

δ(x)=0 when x≠0 |

|

|

delta function in relation to unit step function

|

δ(x)=dU(x)/dx

|

|

|

unit step function in relation to delta function

|

U(x)=∫(δ(λ)dλ,-∞,x)

|

|

|

Probability Density Function in terms of Probability Distribution Function

Discrete Case |

fx(x)=dF(x)/dx=∑(Px(xi)δ(x-xi), all xi)

|

|

|

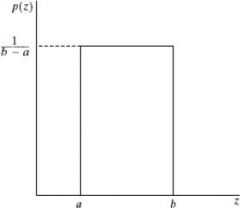

Uniform Density Function

|

f(x)= 1/(b-a) when a≤x≤b

f(x) = 0 otherwise |

|

|

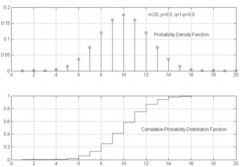

Probability Density Graph vs Probability Distribution Graph

|

Probability density would look like impulses and probability distribution would look like steps starting at lowest probability and ending at 1

|

|

|

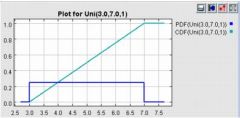

Uniform Distribution Function

|

F(x)=∫(1/(b-a)dλ, a, x) =(x-a)/(b-a)

(see green line) |

|
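The uniform density and distribution functions can be written directly from their definitions. The sketch below uses hypothetical endpoints a=2 and b=6 and checks numerically that integrating the density over [x1, x2] matches F(x2)-F(x1); the midpoint-rule integrator is an illustrative helper, not something taken from the cards.

```python
def uniform_pdf(x, a, b):
    """f(x) = 1/(b-a) on [a, b], 0 otherwise."""
    return 1.0 / (b - a) if a <= x <= b else 0.0

def uniform_cdf(x, a, b):
    """F(x) = (x-a)/(b-a) on [a, b], clamped to 0 below a and 1 above b."""
    if x < a:
        return 0.0
    if x > b:
        return 1.0
    return (x - a) / (b - a)

def integrate_pdf(x1, x2, a, b, steps=1000):
    """Midpoint-rule approximation of the integral of f over [x1, x2]."""
    width = (x2 - x1) / steps
    return sum(uniform_pdf(x1 + (i + 0.5) * width, a, b) for i in range(steps)) * width

a, b = 2.0, 6.0          # hypothetical endpoints
x1, x2 = 3.0, 5.0
by_cdf = uniform_cdf(x2, a, b) - uniform_cdf(x1, a, b)
by_integral = integrate_pdf(x1, x2, a, b)
print(by_cdf, by_integral)
```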

|

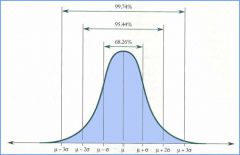

Normal (Gaussian)

|

N(μ,σ)

μ=mean=average value σ^2=variance σ=standard deviation |

|

|

Standard Normal Probability Density Function

|

N(0,1)

|

|

|

Normal Distribution: P(x1<x<x2)

|

F(x2)-F(x1)

=G((x2-μ)/σ)-G((x1-μ)/σ) (we calculate the value inside, and then use a table to find the values of G(a), G(b), etc) |

|
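Instead of a printed table, the standard normal distribution function G can be evaluated with the error function. The sketch below assumes G(z)=0.5(1+erf(z/√2)), which is the standard identity, and uses hypothetical values μ=10, σ=2 with the interval one standard deviation either side of the mean.

```python
import math

def G(z):
    """Standard normal distribution function, via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def normal_interval_probability(x1, x2, mu, sigma):
    """P(x1 < X < x2) for X normal with mean mu and standard deviation sigma."""
    return G((x2 - mu) / sigma) - G((x1 - mu) / sigma)

# Hypothetical parameters: the interval is mu - sigma to mu + sigma.
p = normal_interval_probability(8.0, 12.0, mu=10.0, sigma=2.0)
print(round(p, 4))
```

The normalization step (x-μ)/σ is the "value inside" that would otherwise be looked up in the table.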

|

Binomial

another name |

Bernoulli Trials

|

|

|

Binomial

definition |

Composed of n identical independent trials. Each trial has two outcomes (success p or failure q). The random variable X defines the number of successes in n trials.

|

|

|

Binomial density function approximation when we have a large number of trials

|

Can be approximated with the Gaussian density function N(np, √(npq))

mean = np variance = npq standard deviation = √(npq) |

|

|

Poisson

|

Counts the number of occurrences of an event in a certain time period.

Pk=P(X=k)=e^(-a)*a^k/k! where a is the average number of event occurrences in the same time interval |

|

|

Approximation of binomial

|

large number of trials, small number of successes

we set the average number of event occurrences a=np |

|
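The quality of the Poisson approximation can be checked by comparing the two probability functions term by term. The sketch below assumes hypothetical values n=1000 and p=0.002 (so a=np=2); these numbers are illustrative only.

```python
import math

def binomial_pmf(k, n, p):
    """P(X=k) for k successes in n independent trials with success probability p."""
    return math.comb(n, k) * p**k * (1.0 - p)**(n - k)

def poisson_pmf(k, a):
    """P(X=k) = e^(-a) a^k / k! with average count a."""
    return math.exp(-a) * a**k / math.factorial(k)

n, p = 1000, 0.002       # hypothetical: many trials, rare successes
a = n * p                # Poisson parameter a = np

for k in range(6):
    print(k, binomial_pmf(k, n, p), poisson_pmf(k, a))

# Largest pointwise gap over the first few values of k.
max_diff = max(abs(binomial_pmf(k, n, p) - poisson_pmf(k, a)) for k in range(11))
```

Increasing p while holding n fixed makes the gap grow, which is why the approximation is reserved for the rare-success case.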

|

Joint Probability distribution function

|

Fxy(x1,y1)=P((X<=x1)(Y<=y1))

|

|

|

marginal distribution of y

|

Fxy(∞,y)=Fy(y)

|

|

|

marginal distribution of x

|

Fxy(x,∞)=Fx(x)

|

|

|

Joint Density Function

|

F(x,y)=∫(∫(fxy(α,β)dα,-∞,x)dβ,-∞,y)

P((x1<X<x2)(y1<Y<y2)) =∫(∫(fxy(x,y)dx,x1,x2)dy,y1,y2) |

|

|

Independent random variables

Probability Fxy(x,y) fxy(x,y) |

P((X<=x)(Y<=y))=P(X<=x)P(Y<=y)

Fxy(x,y)=Fx(x)Fy(y) fxy(x,y)=fx(x)fy(y) |

|

|

Conditional Probability Distribution Function

|

F(x|B), the conditional distribution function of the RV X given the event B, is defined as the conditional probability of the event {X<=x}: F(x|B)=P(X<=x|B)

|

|

|

Expected Values:

expected value or mean of a function g(x) |

E[g(x)]=

∫(g(x)f(x)dx,-∞,∞) for continuous X; ∑(g(xi)P(xi), all xi) for discrete X |

|

|

Mean

|

E[X]=

|

|

|

Expected Values

|

E[x]= integral(xf(x)dx)

over the range of -inf to inf |

|

|

Mean

|

E[x]

|

|

|

Variance

|

σ^2,

=E[x^2(t)]-E^2[x(t)] |

|
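For a discrete random variable the mean, moments, and variance reduce to weighted sums. The sketch below assumes a hypothetical fair six-sided die and uses exact fractions so the identity σ^2=E[X^2]-E^2[X] can be read off without rounding.

```python
from fractions import Fraction

# Hypothetical discrete RV: a fair die, P(X = x) = 1/6 for x = 1..6.
pmf = {x: Fraction(1, 6) for x in range(1, 7)}

def expected_value(g, pmf):
    """E[g(X)] = sum of g(xi) P(xi) over all xi."""
    return sum(g(x) * p for x, p in pmf.items())

def moment(n, pmf):
    """n-th moment m_n = E[X^n]."""
    return expected_value(lambda x: x**n, pmf)

mean = moment(1, pmf)
variance = moment(2, pmf) - mean**2      # E[X^2] - (E[X])^2
print(mean, variance)
```

The standard deviation is the square root of `variance`; it is left out here because it is not a rational number for this example.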

|

Moments

|

m_n=E[x^n]

|

|

|

Standard deviation

|

σ, square root of variance

|

|

|

Covariance

|

measure of how much two variables change together

If two variables tend to vary together (that is, when one of them is above its expected value, then the other variable tends to be above its expected value too), then the covariance between the two variables will be positive. On the other hand, when one of them is above its expected value the other variable tends to be below its expected value, then the covariance between the two variables will be negative. |

|

|

Covariance

equation |

Cxy=E[XY]-E[X]E[Y]

|

|
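The covariance formula Cxy=E[XY]-E[X]E[Y] can be evaluated directly from a joint probability table. The joint probabilities below are hypothetical and were chosen so that X and Y tend to move together, which should give a positive covariance.

```python
from fractions import Fraction

# Hypothetical joint probabilities P(X=x, Y=y) for two binary variables.
joint = {
    (0, 0): Fraction(2, 5),
    (0, 1): Fraction(1, 10),
    (1, 0): Fraction(1, 10),
    (1, 1): Fraction(2, 5),
}

e_x = sum(x * p for (x, y), p in joint.items())        # E[X]
e_y = sum(y * p for (x, y), p in joint.items())        # E[Y]
e_xy = sum(x * y * p for (x, y), p in joint.items())   # correlation Rxy = E[XY]

c_xy = e_xy - e_x * e_y                                # covariance Cxy
print(e_xy, c_xy)
```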

|

Correlation

|

E[XY]=Rxy

correlation refers to the departure of two random variables from independence |

|

|

Covariance and Correlation of uncorrelated random variables

|

Covariance = Cxy=0

Correlation=Rxy=E[x]E[y] |

|

|

Orthogonality

|

Correlation = Rxy=0

|

|

|

Correlation and Independence

|

Independence will give us uncorrelatedness but not the other way around

|

|
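A small counterexample shows why uncorrelated does not imply independent. The sketch assumes a hypothetical X that takes the values -1, 0, 1 with equal probability and sets Y=X^2, so Y is completely determined by X even though the covariance is zero.

```python
from fractions import Fraction

# Hypothetical X: equally likely values -1, 0, 1.  Y is a function of X.
p_x = {-1: Fraction(1, 3), 0: Fraction(1, 3), 1: Fraction(1, 3)}
joint = {(x, x * x): p for x, p in p_x.items()}        # P(X=x, Y=x^2)

e_x = sum(x * p for (x, y), p in joint.items())
e_y = sum(y * p for (x, y), p in joint.items())
e_xy = sum(x * y * p for (x, y), p in joint.items())
covariance = e_xy - e_x * e_y                          # zero: uncorrelated

# Independence would require P(X=0, Y=0) = P(X=0) P(Y=0).
p_y0 = sum(p for (x, y), p in joint.items() if y == 0)
joint_00 = joint[(0, 0)]
product_00 = p_x[0] * p_y0
print(covariance, joint_00, product_00)
```

The joint probability and the product of the marginals differ, so X and Y are dependent despite having zero covariance.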

|

Characteristic Function

|

the characteristic function of any random variable completely defines its probability distribution.

|

|

|

Stochastic Process

|

A random variable X is a rule for assigning a number X(psi) to every outcome psi of an experiment.

A stochastic process X(t) is a rule for assigning a function X(t,psi) to every outcome psi. |

|

|

Random sequence

|

A random process for which time has only discrete values.

|

|

|

Deterministic process

|

Future values of any sample function can be predicted from past values.

|

|

|

Non deterministic process

|

Also called regular process. Consists of sample functions that cannot be predicted from past values.

|

|

|

Stationary

|

A random process (ie "stochastic process") is stationary if the joint probability distribution does not change when shifted in time or space.

|

|

|

Example of stationary process

|

White Noise

|

|

|

Properties of a stationary process

|

mean and variance do not change over time (they are constants)

F(x1,x2) depends only on difference between time samples Autocorrelation Rx(t1,t2)=Rx(t2-t1) Autocovariance Cx(t1,t2)=Cx(t2-t1) |

|

|

WSS

|

"wide sense stationary" or "weak sense stationary"

only requires the first and second moments to not vary with respect to time (so first and second moments are constant) |

|

|

Conditions to be WSS

|

E(x)=constant

Autocovariance Cx(t1,t2)=Cx(t1-t2) for all t1, t2 (ie depends only on difference between time samples) (as a result the autocorrelation Rx(t1,t2)=Rx(t2-t1)) |

|

|

Properties of WSS

Mean Variance Autocovariance Autocorrelation |

Mean: constant

Variance: constant (b/c composed of 1st and 2nd moments) Autocovariance: depends only on difference between time samples Autocorrelation: depends only on difference between time samples |

|

|

Properties of WSS

Autocorrelation |

1. Average power (2nd moment) of the process is given at τ=0: R(0)=E[x(t)^2] for all t

2. Even function of τ: Rx(τ)=Rx(-τ)

3. Measure of the rate of change of a random process

4. Maximum at τ=0: |Rx(τ)|<=Rx(0) |

|
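These autocorrelation properties can be observed on a simple process. The sketch assumes a hypothetical random-phase sinusoid X(t)=cos(ωt+θ) with θ uniform on [0, 2π), a standard textbook example of a WSS process that is not taken from the cards. The ensemble average is approximated by a deterministic sweep over equally spaced phases rather than by random sampling.

```python
import math

OMEGA = 2.0        # hypothetical angular frequency (rad/s)
N_PHASES = 360     # equally spaced phases standing in for the ensemble

def sample_function(t, theta):
    """One sample function of the process for a fixed phase theta."""
    return math.cos(OMEGA * t + theta)

def autocorrelation(t1, t2):
    """Ensemble average E[X(t1) X(t2)] over the uniformly distributed phase."""
    total = 0.0
    for k in range(N_PHASES):
        theta = 2.0 * math.pi * k / N_PHASES
        total += sample_function(t1, theta) * sample_function(t2, theta)
    return total / N_PHASES

r0 = autocorrelation(0.7, 0.7)        # tau = 0: average power
r_tau = autocorrelation(0.0, 0.4)     # tau = 0.4
r_shift = autocorrelation(5.0, 5.4)   # same tau, shifted in time
r_neg = autocorrelation(0.4, 0.0)     # tau = -0.4
print(r0, r_tau, r_shift, r_neg)
```

The shifted and unshifted values agree (dependence on τ only), the positive and negative lags agree (even function), and neither exceeds the value at τ=0.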

|

Second order joint distribution

|

F(x1,x2;t1,t2)=P(X(t1)<=x1, X(t2)<=x2)

|

|

|

Autocorrelation when t1=t2

|

when t1=t2=t our autocorrelation is equal to the mean squared value (or average power) of X(t)

|

|

|

Autocorrelation

|

The autocorrelation of X(t) at two time instants of t1 and t2 is the correlation between two random variables X(t1) and X(t2).

Also known as the joint moment of X(t1) and X(t2) |

|

|

Uncorrelated

|

Random variables whose cross covariance is zero are called uncorrelated.

Uncorrelated random variables have a correlation coefficient of zero. If X and Y are independent, then they are uncorrelated (but not necessarily the other way around) |

|

|

Covariance

|

E[XY]-E[X]E[Y]

covariance is a measure of how much two variables change together If two variables tend to vary together (that is, when one of them is above its expected value, then the other variable tends to be above its expected value too), then the covariance between the two variables will be positive. On the other hand, when one of them is above its expected value the other variable tends to be below its expected value, then the covariance between the two variables will be negative. |

|

|

Covariance and Correlation

|

when E[x(t)]=0

Covariance=Correlation |

|

|

Variance vs Covariance

|

variance is a special case of the covariance when the two variables X and Y are identical

|

|

|

Orthogonal

|

if the cross correlation Rxy is zero then X and Y are called orthogonal random processes

|

|

|

δ(t1-t2)

|

1 when t1=t2

0 otherwise |

|

|

Cross correlation and cross covariance

|

used to distinguish from the covariance of a single variable but we loosely use the term covariance for both

Rxy=E[XY] same as Rxy(t1,t2)=E[X(t1)Y(t2)] Cxy=Rxy-E[X]E[Y] same as Cxy(t1,t2)=Rxy(t1,t2)-E[X(t1)]E[Y(t2)] |

|

|

How to calculate the autocorrelation

|

E[XY]=∫∫(xy fxy(x,y)dxdy,-∞,∞), with X=X(t1) and Y=X(t2)

double integral of xy times the joint probability density function, taken over x and y from -∞ to ∞ |

|

|

impulse response

|

the response of a linear time invariant system to an input unit delta function δ(t) is called the impulse response h(t)

|

|

|

causality

|

a system is said to be causal if the response at time t depends only on the present and past values of the input.

|

|

|

State Space Representation

|

Dynamic systems are represented by a system of ordinary linear differential equations.

The dependent variables become the state variables. Any physical system can be represented by the state space representation (which includes a state/plant equation and an output equation) |

|

|

State Variables

|

Dependent variables in a dynamic system represented by a system of linear differential eqns.

The state variables represent all the internal characteristics of the dynamic system. They cannot generally be measured directly (they are "hidden variables") |

|

|

Input of State Space Eqns

|

u(t)

Properties: (1) Deterministic: known or measurable by us (2) Random: known statistical properties such as mean, variance, etc. |

|

|

Output of state space eqns

|

y(t) or z(t)

known through measurements |

|

|

Form of state space equations:

|

x'(t)=Ax(t)+Bu(t)

y(t)=Cx(t)+Du(t) OR x'(t)=F(t)x(t)+C(t)u(t) z(t)=H(t)x(t)+D(t)u(t) |

|

|

State Equation

(another name) |

Plant Equation

|

|

|

State Equation:

|

State Equation/Plant Equation

x'(t)=F(t)x(t)+C(t)u(t) F(t) is the dynamic coefficient matrix C(t) is the input coupling matrix |

|

|

Output Equation

|

z(t)=H(t)x(t)+D(t)u(t)

H(t) is the measurement sensitivity matrix D(t) is the input/output coupling matrix |

|

|

What can be measured in the state equation

|

only the inputs and outputs of the system can be measured

|

|

|

State space representation:

time invariant |

F(t)=F, H(t)=H, C(t)=C, D(t)=D

x'(t)=Fx(t)+Cu(t) z(t)=Hx(t)+Du(t) |

|

|

Fundamental Matrix

(another name) |

State transition matrix

|

|

|

State transition matrix

|

State transition matrix, Φ(t)

Transforms the initial state x(t0), of the dynamic system to the corresponding state at time t, x(t) |

|

|

Fundamental matrix

Time invariant system (equation) |

Φ(t)=e^(At)=InverseLaplace((sI-A)^-1)

where A=F |

|
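For a time-invariant system the state transition matrix can be approximated numerically. The sketch below assumes the usual convention Φ(t)=e^(Ft) and swaps in a truncated power series I + Ft + (Ft)^2/2! + ... instead of the Laplace route. The matrix F is a hypothetical double integrator (position and velocity), chosen because its series terminates after two terms.

```python
def mat_mul(a, b):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def state_transition(F, t, terms=20):
    """Truncated series for e^(F t): sum of (F t)^k / k! for k < terms."""
    n = len(F)
    phi = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    term = [row[:] for row in phi]                 # current term, starts at I
    for k in range(1, terms):
        term = [[v * t / k for v in row] for row in mat_mul(term, F)]
        phi = [[phi[i][j] + term[i][j] for j in range(n)] for i in range(n)]
    return phi

F = [[0.0, 1.0],
     [0.0, 0.0]]          # hypothetical double integrator: x1' = x2, x2' = 0
x0 = [1.0, 3.0]           # hypothetical initial state: position 1, velocity 3

phi = state_transition(F, t=2.0)
x_t = [sum(phi[i][j] * x0[j] for j in range(2)) for i in range(2)]
print(phi, x_t)
```

With no input, x(t)=Φ(t)x(0), so the position advances by velocity times elapsed time while the velocity stays fixed.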

|

Observability

(definition) |

Observability is the question of whether the states of a given dynamic system are uniquely determinable from its inputs and outputs, given a model of the system (ie F, H, C, D).

So, given z(t) and the system model, can we find all states (ie x(t)) of the system? |

|
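One common way to answer the observability question for a time-invariant system is the rank test on the matrix formed by stacking H, HF, ..., HF^(n-1). The cards do not state this test, so it is brought in here as an assumption. The sketch covers only a hypothetical two-state system with a single scalar measurement, where full rank reduces to a nonzero 2x2 determinant.

```python
def row_times_matrix(h, F):
    """Row vector h multiplied by matrix F."""
    return [sum(h[k] * F[k][j] for k in range(len(h))) for j in range(len(F[0]))]

def is_observable_two_state(F, h):
    """Rank test for n = 2: the stacked matrix [h; hF] must be nonsingular."""
    obs = [h, row_times_matrix(h, F)]
    det = obs[0][0] * obs[1][1] - obs[0][1] * obs[1][0]
    return det != 0

F = [[0, 1],
     [0, 0]]                 # hypothetical double integrator: position, velocity

measure_position = [1, 0]    # z = x1
measure_velocity = [0, 1]    # z = x2

print(is_observable_two_state(F, measure_position))
print(is_observable_two_state(F, measure_velocity))
```

Measuring position lets velocity be inferred from how the output changes, while measuring only velocity leaves the initial position undetermined.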

|

Controllability

(definition) |

The question is whether we can, with a given input (or control), go from one state to another state

|

|

|

Given:

x'(t)=F(t)x(t)+G(t)w(t)+C(t)u(t) z(t)=H(t)x(t)+v(t)+D(t)u(t) what is w(t) and v(t)? |

w(t): process noise

v(t): sensor or measurement noise. These are usually uncorrelated zero-mean processes: E[w(t)]=0, E[v(t)]=0 |